Professor Kevin Fu of Northeastern University has identified a new potential threat to the security of self-driving cars: the ability to make them “see” objects that aren’t really there, in what he dubs a “Poltergeist” attack.

The finding highlights an uncomfortable fact of cybersecurity: the technologies we rely on can be exploited in unforeseen ways.

Fu, who specializes in finding and demonstrating weaknesses in emerging technologies, has developed a type of cyberattack he calls an “acoustic adversarial” attack or, more creatively, the “Poltergeist” attack.

Unlike many other cyberattacks, this method doesn’t just obstruct or meddle with technology. Instead, it creates what Fu describes as “false coherent realities,” essentially optical illusions for computers that use machine learning to make decisions.

Fu’s technique manipulates the optical image stabilization present in the cameras of many modern devices, including autonomous cars.

This stabilization technology normally counteracts the photographer’s shaking and movement to avoid blurry pictures.

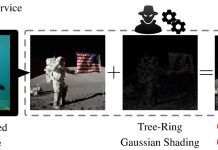

By finding the resonant frequencies of the materials in the sensors that drive this stabilization, Fu and his team were able to aim matching sound waves at the cameras, causing them to blur images instead of sharpening them.
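To see why a matching frequency matters, here is a minimal sketch that treats a sensor element as a driven, damped harmonic oscillator, a textbook simplification rather than the model used in Fu’s research. The 20 kHz resonance and the damping ratio are hypothetical values chosen only for illustration.

```python
import math


def steady_state_amplitude(drive_hz, resonant_hz, damping_ratio, force_per_mass=1.0):
    """Steady-state amplitude of a driven, damped harmonic oscillator."""
    w = 2 * math.pi * drive_hz        # driving angular frequency
    w0 = 2 * math.pi * resonant_hz    # natural (resonant) angular frequency
    stiffness_term = (w0 ** 2 - w ** 2) ** 2
    damping_term = (2 * damping_ratio * w0 * w) ** 2
    return force_per_mass / math.sqrt(stiffness_term + damping_term)


def find_peak_frequency(resonant_hz, damping_ratio, start_hz, stop_hz, step_hz):
    """Sweep integer frequencies and return the one with the largest response."""
    return max(
        range(start_hz, stop_hz + 1, step_hz),
        key=lambda hz: steady_state_amplitude(hz, resonant_hz, damping_ratio),
    )


# Hypothetical sensor: 20 kHz resonance with very light damping (assumed values).
RESONANT_HZ = 20_000
DAMPING_RATIO = 0.001

peak_hz = find_peak_frequency(RESONANT_HZ, DAMPING_RATIO, 15_000, 25_000, 10)

# Compare the response at the peak with the response well away from resonance.
gain = (
    steady_state_amplitude(peak_hz, RESONANT_HZ, DAMPING_RATIO)
    / steady_state_amplitude(15_000, RESONANT_HZ, DAMPING_RATIO)
)
print(peak_hz, round(gain))
```

In this toy model, the same sound pressure moves the sensor far more at the resonant frequency than a few kilohertz away from it, which is why an attacker would first sweep for that frequency.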

This is where things get spooky.

The blurring lets attackers create fake silhouettes or blur patterns that might not look like anything to a human but that the machine-learning algorithms in autonomous vehicles could misinterpret as people, stop signs, or other objects, potentially leading to hazardous situations.
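As a rough illustration of what such blurring does to the pixels a detector receives, the sketch below applies a simple horizontal motion blur to a tiny grayscale image. Treating the effect as a uniform, one-directional smear is an assumption made for clarity, and the function names and the sample image are hypothetical.

```python
def motion_blur_row(row, blur_length):
    """Average each pixel with the (blur_length - 1) pixels to its left.

    Pixels that fall outside the row are treated as black (0).
    """
    blurred = []
    for i in range(len(row)):
        window = [row[j] if j >= 0 else 0 for j in range(i - blur_length + 1, i + 1)]
        blurred.append(sum(window) / blur_length)
    return blurred


def motion_blur_image(image, blur_length):
    """Apply the same horizontal smear to every row of a 2D image."""
    return [motion_blur_row(row, blur_length) for row in image]


# A thin, bright vertical line on a dark background (illustrative data).
sharp = [
    [0, 0, 0, 9, 0, 0, 0],
    [0, 0, 0, 9, 0, 0, 0],
]

blurred = motion_blur_image(sharp, 3)
print(blurred[0])
```

The one-pixel line is spread into a dimmer, three-pixel-wide band. Shapes and edges that a classifier relies on can shift in this way even when the change looks like harmless smearing to a person.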

Using this technique, Fu’s team could manipulate how self-driving cars and drones interpreted their surroundings, adding, removing, or altering the objects these autonomous systems perceived.

For instance, a self-driving car could be made to see a stop sign where there isn’t one, or conversely, not see a real object or person, potentially leading to accidents.

Fu’s Poltergeist attack goes beyond just making machines see ghosts. It also raises significant questions about the dependability of machine learning and AI technologies.

Fu’s investigations show how susceptible AI can be to disruptions and false information, shedding light on why it’s crucial to scrutinize the reliability of these technologies.

In a world increasingly reliant on advanced technologies and AI, understanding and acknowledging the potential vulnerabilities in these systems is paramount.

Left unchecked, these vulnerabilities could undermine consumer confidence and make people reluctant to adopt such technologies, possibly stalling technological progress for years.

Professor Fu is hopeful that exposing these vulnerabilities will lead to more secure and reliable technological developments in the future.

He emphasizes that as exciting as these new technologies are, they need to be robust and resilient against various cybersecurity threats to gain acceptance and trust from consumers.

If technology developers overlook these potential flaws, we could face setbacks where groundbreaking technologies might remain unused due to lack of consumer confidence.

In short, exploring such invisible and insidious threats to emerging technologies is crucial to advancing and adopting new, transformative innovations.

Fu’s “Poltergeist” attack brings to light unseen and overlooked vulnerabilities, providing an invaluable opportunity for technology developers to address these issues, ensuring the safety and reliability of future innovations in autonomous systems and artificial intelligence.
