If there’s one familiar sound whenever a volunteer tries out an interactive device that uses electrical muscle stimulation, it is probably laughter.

Even for experienced users of the technology, the sensation of a machine controlling your body feels unnatural and strange.

Something about the experience disrupts people’s sense of agency—the feeling of being in control of one’s actions—which could interfere with the technology’s potential to improve learning and make virtual reality more realistic.

As Asst. Prof. Pedro Lopes explored devices through a human-computer interface lens, first in his doctoral work at the Hasso Plattner Institute in Germany and now at the University of Chicago, he grew interested in whether agency can be measured, controlled or even restored during use of such devices.

In two recent pioneering papers, Lopes and collaborators have been on the trail of agency, using everything from pitching machines to fMRI brain scanners.

“We started by asking the question: Does electrical muscle stimulation always have to feel that unnatural, or is there anything we can do to make it feel more in tune with your own volition?” Lopes said.

“I think we just started to answer it, but there are infinite ways to look at this thing because it’s such a philosophical question.”

The research on agency started with a simple demo performed at a 2018 conference by Jun Nishida, now a postdoctoral researcher at the University of Chicago:

Could electrical muscle stimulation help people catch a marker dropped by another person at short range?

It did, and as expected, most volunteers attributed the action to the machine, not their own reflexes. But a small minority of participants disagreed, saying that the stimulation, known as EMS, must not have been on because they caught the marker unassisted.

Those outliers inspired an experiment in which Lopes, Nishida and Shunichi Kasahara of Sony Computer Science Laboratories adjusted the timing of when EMS triggered a user to perform a particular task, such as hitting a button in response to a stimulus, or more advanced actions such as photographing a fast-moving baseball.

They found that there’s a window of time wherein it becomes very hard for users to distinguish between their own actions and those generated by EMS.

“If we do it very early on it will feel very artificial, because you do something completely superhuman: You hit a ball much faster or you grab the pen super early,” Lopes said.

“But if we would do the stimulation just a little bit before you would normally do it yourself, you might not even spot that this was the muscle stimulation and not you. You feel like you did it.”

In that study, subjects were asked verbally after the task whether they felt like they did it themselves or were helped by the EMS device. In a recent paper, Lopes went deeper, gauging whether participants felt agency directly from their brains by measuring electrical activity during a virtual reality task.

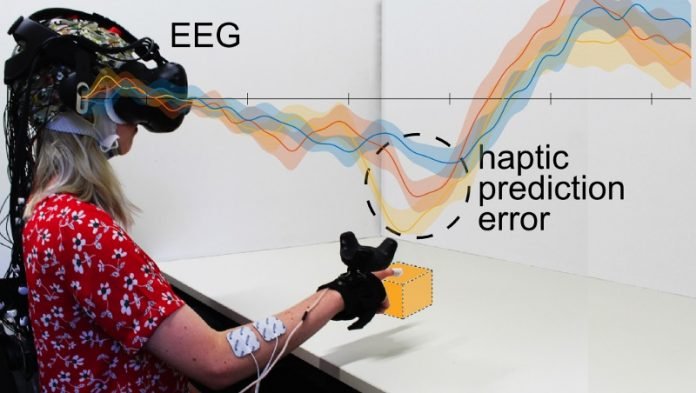

In a virtual reality environment, users were asked to touch a virtual box. At the moment their fingers contacted the box, they received one of three kinds of feedback: visual feedback alone, visual feedback plus vibrations on their fingertips, or both of those plus muscle stimulation.

But in a certain subset of trials, that feedback was “wrong,” occurring before the user appeared to touch the box. When this mismatch occurred, researchers saw a notable change in the brain’s electrical activity—a neural signal that the virtual reality experience was less realistic.

“Your brain has a pretty good detector for whether you feel like you’re there,” Lopes said. “It’s kind of dynamically measuring how you feel in those virtual worlds, even though you don’t have to report it.”

That project dovetailed with a concurrent collaboration between Lopes and neuroscientists at University College London and FU Berlin, who wanted to use EMS and fMRI to search for brain areas that distinguish between self-generated and externally generated motion.

The question reflects the selective nature of touch, where some sensations, such as from your fingers while searching for a coin in your pocket, can be extremely sensitive while others, such as the motion of your arms to and from the pocket, are virtually imperceptible.

Lopes helped design a system in which participants in an fMRI scanner were asked to flex their middle finger with or without the help of EMS, receiving a tactile stimulus that simulated touching a hard surface on half the trials.

This setup allowed researchers to tease apart the motor and sensory systems of the brain, and search for the brain area that potentially tunes these responses. The data pointed to a region called the posterior insular cortex, which responded strongly to touch during self-generated motion, but not during motion driven by EMS.

“It suggests there’s actually a mechanism associated to touch that is checking for agency and regulating these inputs, which could explain why we can so quickly change between one thing to another, if it’s top-down,” Lopes said.

“That little process seems to know what’s going on.”

Zeroing in on this brain area and its electrical activity could guide engineers designing the next wave of EMS devices. Monitoring a user’s brain activity might help developers know, without asking, whether a virtual reality experience is perceived as real, so they can fine-tune the software accordingly.

More broadly, preserving a sense of agency in the user might make EMS more effective at teaching someone how to perform a physical action, such as improving a tennis stroke or playing an instrument.

“The next thing we’re trying to see is, using these precise timings that help you be a little bit faster but still provide you with a lot of agency, does this influence the way you would learn with such a system?” Lopes said.

“It wouldn’t dramatically improve you, because we know that if it’s too much change from your expectation, you’re not going to believe it. These smaller changes might make

Written by Rob Mitchum.