A new kind of super-sized computer chip could reshape how we train powerful AI systems—and make them more environmentally friendly too.

Engineers from the University of California, Riverside (UCR) have written a review in the journal Device exploring these “wafer-scale accelerators,” which are helping AI models run faster while using less energy.

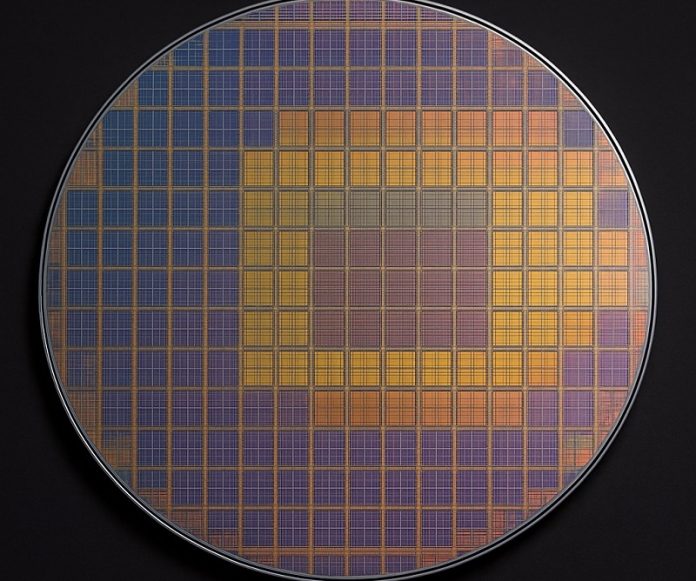

These chips, made by companies like Cerebras, are built on full silicon wafers about the size of a dinner plate.

That’s much bigger than the typical chips inside most AI systems today, like GPUs, which are only the size of a postage stamp.

The lead author, UCR professor Mihri Ozkan, says this technology is a big leap forward. With so much more space, wafer-scale chips can pack in more computing power without overheating or using excessive electricity—something we urgently need as AI models grow bigger and more complex.

Traditional GPUs became the go-to tool for AI because they can run thousands of calculations in parallel. They power everything from driverless cars to chatbots. But now, even the best GPUs are starting to reach their limits. They require lots of energy and cooling, and they struggle to move massive amounts of data quickly between chips.

Wafer-scale chips like Cerebras’ WSE-3 change the game by keeping everything on one giant chip. That means far less time and energy is lost sending data between separate chips. The WSE-3 chip has 4 trillion transistors and 900,000 AI-specialized cores, all in one place. Another design, Tesla’s Dojo, combines D1 chips into modules that each hold 1.25 trillion transistors and thousands of cores.

These powerful chips also have energy-saving benefits. Because they reduce the distance data has to travel, they use far less electricity and produce less heat. For example, Cerebras says its chips use only one-sixth the power of equivalent GPU setups when doing the same tasks. That’s especially important as data centers, which run 24/7, use huge amounts of electricity and water just to stay cool.

Still, there are some trade-offs. Wafer-scale chips are expensive to make and aren’t the best choice for smaller, simpler jobs. GPUs will continue to be useful in many areas. But for training the largest AI models, these giant chips are becoming essential.

Cooling remains a challenge, as some chips can generate up to 10,000 watts of heat. Companies like Cerebras and Tesla have built special liquid cooling systems to manage this.
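
To put that heat figure in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes, purely for illustration, that a chip runs at the quoted 10,000-watt peak around the clock for a full year; real workloads vary, so treat the result as an upper bound rather than a measurement. The function name `annual_energy_kwh` is our own and does not come from the review.

```python
# Hypothetical helper: converts a constant power draw into yearly energy.
HOURS_PER_YEAR = 24 * 365  # continuous operation over a non-leap year


def annual_energy_kwh(power_watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Return the energy, in kilowatt-hours, of a constant draw over `hours`."""
    return power_watts / 1000 * hours


# Assumption: the chip sits at the article's peak figure all year long.
peak_heat_watts = 10_000
print(annual_energy_kwh(peak_heat_watts))  # 87600.0
```

Under that assumption, a single chip would release about 87,600 kilowatt-hours of heat per year, all of which the cooling system has to carry away.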

The UCR team also points out that most of a chip’s carbon footprint comes from manufacturing. They call for greener production methods and recyclable materials to reduce environmental impact from start to finish.

In the end, wafer-scale chips aren’t just about faster AI—they could also help us build a more sustainable digital future.