Engineering researchers at the University of Minnesota Twin Cities have developed a groundbreaking hardware device that could drastically reduce the energy consumption of artificial intelligence (AI) systems.

The technology, described in a research paper titled “Experimental demonstration of magnetic tunnel junction-based computational random-access memory” in the journal npj Unconventional Computing, could reduce energy consumption by a factor of at least 1,000 compared to current methods.

With the increasing demand for AI applications, scientists have been seeking ways to make these processes more energy-efficient while maintaining high performance and low costs.

Typically, AI systems consume a lot of power as they move data between logic (where information is processed) and memory (where data is stored).

This new device changes that by keeping data within the memory during processing.

The team at the University of Minnesota demonstrated a new model called computational random-access memory (CRAM), where data processing happens entirely within the memory array.

“This work is the first experimental demonstration of CRAM, where data can be processed entirely within the memory array without the need to leave the grid where a computer stores information,” said Yang Lv, a postdoctoral researcher and the lead author of the paper.
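To illustrate why avoiding data movement matters, here is a minimal sketch of a toy energy model. The per-operation costs are hypothetical placeholders chosen for illustration, not figures from the paper, and the function names are invented for this example.

```python
# Toy energy model. All costs (in picojoules) are hypothetical
# placeholders, not measurements from the CRAM paper.

def conventional_energy(num_ops, compute_pj, transfer_pj):
    """Each operation pays for compute plus moving data between logic and memory."""
    return num_ops * (compute_pj + transfer_pj)


def in_memory_energy(num_ops, compute_pj):
    """Data stays in the memory array, so only the compute cost is paid."""
    return num_ops * compute_pj


ops = 1_000_000
conventional = conventional_energy(ops, compute_pj=1.0, transfer_pj=100.0)
in_memory = in_memory_energy(ops, compute_pj=1.0)
print(f"conventional / in-memory energy: {conventional / in_memory:.0f}x")
```

In this simplified model the transfer cost dominates, so removing it accounts for nearly all of the saving. Real devices have additional costs that the sketch ignores.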

The International Energy Agency (IEA) forecasts that AI’s energy consumption could more than double, from 460 terawatt-hours (TWh) in 2022 to 1,000 TWh in 2026, roughly equivalent to the entire electricity consumption of Japan.
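The arithmetic behind that forecast can be checked in a few lines. This sketch uses only the two figures quoted above; the implied annual growth rate is a derived value that assumes steady compound growth over the four years, which the forecast itself does not state.

```python
# Figures quoted in the article (terawatt-hours).
consumption_2022_twh = 460
forecast_2026_twh = 1000

# Overall growth factor between 2022 and 2026.
growth_factor = forecast_2026_twh / consumption_2022_twh

# Implied yearly growth, assuming steady compound growth over 4 years
# (an assumption made here for illustration only).
years = 2026 - 2022
annual_growth = growth_factor ** (1 / years) - 1

print(f"growth factor: {growth_factor:.2f}x")
print(f"implied annual growth: {annual_growth:.1%}")
```

The growth factor comes out slightly above 2, which is why the forecast is described as a doubling.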

A CRAM-based device could significantly cut this energy use. A CRAM-based machine learning inference accelerator is estimated to improve energy efficiency by a factor of about 1,000.

In some tests, the new method reduced energy use by factors of 2,500 and 1,700 compared to traditional techniques.
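Reduction factors this large can be easier to interpret as percentages. The sketch below converts the factors quoted above into the share of energy saved, assuming each factor compares the same workload on both systems; the helper name `percent_saved` is invented for this example.

```python
def percent_saved(reduction_factor):
    """Percentage of energy saved when use drops by the given factor."""
    return (1 - 1 / reduction_factor) * 100


# Reduction factors quoted in the article.
for factor in (1000, 1700, 2500):
    print(f"{factor}x reduction -> {percent_saved(factor):.2f}% less energy")
```

Because every factor here is so large, the percentages all sit above 99.9%, so the factors themselves are the more informative way to compare results.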

This breakthrough is the result of over two decades of research. Jian-Ping Wang, the senior author of the paper and a Distinguished McKnight Professor at the University of Minnesota, recalled how their initial idea of using memory cells directly for computing was considered “crazy” 20 years ago.

However, with a dedicated team of students and interdisciplinary faculty, they have now proven that this technology is feasible and ready for use.

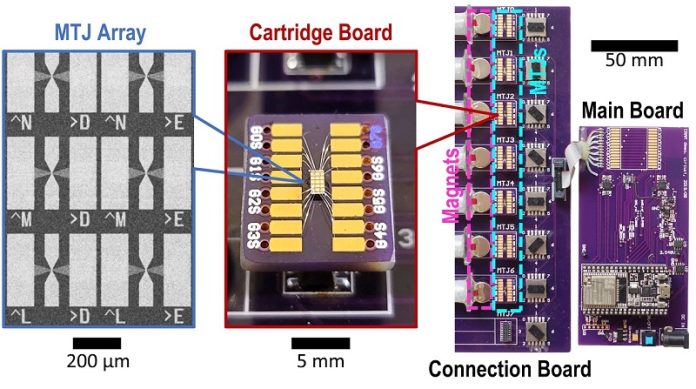

The CRAM technology builds on Wang’s earlier research into magnetic tunnel junction (MTJ) devices.

These nanostructured devices are used to improve hard drives, sensors, and other microelectronic systems, including the Magnetic Random Access Memory (MRAM) found in microcontrollers and smartwatches.

CRAM architecture allows computation to happen directly within the memory cells, eliminating the need for energy-intensive data transfers. “As an extremely energy-efficient digital-based in-memory computing substrate, CRAM is very flexible in that computation can be performed in any location in the memory array,” said Ulya Karpuzcu, an associate professor at the University of Minnesota and co-author of the paper.

The team plans to collaborate with semiconductor industry leaders to demonstrate the technology on a larger scale and advance AI functionality. This new device could be a game-changer for AI, making it much more energy-efficient and cost-effective.