Researchers at Macquarie University have overturned a theory, dating back to the 1940s, about how humans pinpoint where sounds come from.

Their findings suggest that simpler, more adaptable technology could be developed for hearing aids and smart devices.

The traditional view held that humans have specialized brain detectors dedicated solely to locating sounds, which work by registering the precise difference in when a sound reaches each ear.
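The timing cue behind that traditional view is the interaural time difference (ITD): the tiny delay between a sound's arrival at one ear and at the other. Banks of detectors, each tuned to one particular delay, behave much like a cross-correlation of the two ear signals. The sketch below illustrates only that older timing model, not the network described in the new study. Its function name, the 0.8 ms search limit and the synthetic noise input are all illustrative assumptions.

```python
import random


def estimate_itd(left, right, fs, max_itd=0.0008):
    """Estimate the interaural time difference in seconds.

    A positive result means the sound reached the left ear first.
    max_itd (0.8 ms) is an assumed upper bound on plausible delays.
    left and right must be equal-length lists of samples.
    """
    max_lag = int(round(max_itd * fs))
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Each candidate lag acts like one delay-tuned "detector":
        # it scores how well the right-ear signal, shifted by `lag`
        # samples, lines up with the left-ear signal.
        score = sum(left[i] * right[i + lag] for i in range(max_lag, n - max_lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / fs


if __name__ == "__main__":
    random.seed(0)
    fs = 44_100  # assumed sample rate in Hz
    noise = [random.gauss(0.0, 1.0) for _ in range(2_000)]
    delay = 10  # the right ear hears the sound 10 samples later
    right = [0.0] * delay + noise[:-delay]
    itd = estimate_itd(noise, right, fs)
    print(f"estimated ITD: {itd * 1e6:.0f} microseconds")
```

The new study argues that human listeners do not need this kind of exhaustive, one-detector-per-delay layout.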

However, the new study, published in Current Biology, shows that humans instead rely on a more general and efficient neural network for this task, much like smaller mammals such as gerbils and guinea pigs.

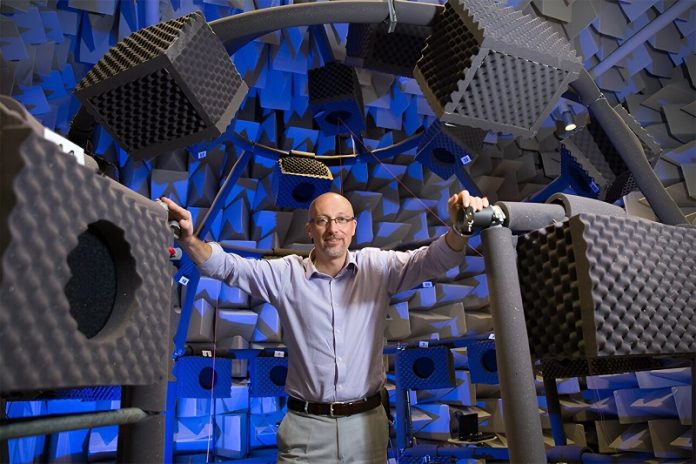

Professor David McAlpine, a hearing expert at Macquarie University, and his team used advanced hearing tests and brain imaging to show that humans, like other mammals such as rhesus monkeys, rely on these simpler networks.

The discovery challenges the notion that the human brain is exceptionally advanced when it comes to locating sounds.

“This finding reminds us that our brains are not as uniquely sophisticated as we might think,” explains Professor McAlpine.

He calls this efficient neural circuitry our “gerbil brain,” a nod to its simplicity and effectiveness.

The same network is also responsible for separating speech from background noise.

This matters for the design of hearing devices and virtual assistants, which often struggle to pick out individual voices in noisy environments, a challenge known as the “cocktail party problem.”

Professor McAlpine suggests that current technology, which uses large language models (LLMs) to predict and process speech, may be overcomplicated. Instead, he advocates a simpler approach modeled on how many animals, humans included, use basic snippets of sound to identify and locate sound sources.

This approach does not require reconstructing the complete audio signal; instead, it focuses on how the brain represents these sounds at an early stage of processing.

“Our findings indicate that sophisticated language processing isn’t necessary for effective listening. We just need the basic, efficient processing akin to a gerbil’s brain,” he adds.

The research could lead to more efficient and adaptable hearing aids, as well as smarter, more responsive virtual assistants.

Next, the team plans to determine the minimum amount of sound information needed to locate a sound source as accurately as possible, work that could pave the way for audio technologies that simplify and improve how we interact with our surroundings.