In the digital world we live in, it’s getting harder to tell if the information we read online is created by humans or by computers.

This is especially true with the rise of advanced computer programs, known as large language models (LLMs), which can write text that sounds very human-like.

But researchers at Columbia Engineering have taken a big step toward solving this puzzle.

Professors Junfeng Yang and Carl Vondrick, along with their team, have created a new system called Raidar.

This tool is designed to figure out if a piece of text, like a tweet, a review, or a blog post, was written by a person or generated by a computer.

They’re planning to present Raidar at a major tech conference in Vienna, Austria, and they’ve made their research available online for everyone to read.

The cool part about Raidar is how it works.

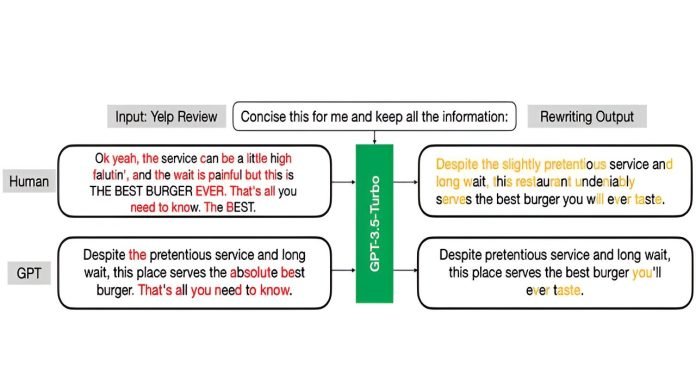

The researchers noticed that LLMs tend to treat computer-generated text as already well written, so when asked to edit or rewrite it, they don’t make many changes.

On the other hand, texts written by humans usually get more edits because the programs see more room for improvement.

So, Raidar uses this insight to test texts. It asks another LLM to rewrite the text in question. Then, it looks at how much the text has been changed.

If there are a lot of edits, Raidar thinks a human likely wrote the original text. If not many changes are made, it suggests the text might have been computer-generated.
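
To make the idea concrete, here is a minimal Python sketch of a rewrite-and-compare check. It is not the Columbia team's code. The function names, the word-level comparison, and the 0.2 threshold are all assumptions chosen for illustration, and `rewrite` stands in for whatever LLM call you would use to rewrite the text.

```python
from difflib import SequenceMatcher
from typing import Callable


def change_ratio(original: str, rewritten: str) -> float:
    """Return how much the text changed: 0.0 means identical, 1.0 means no words in common."""
    original_words = original.split()
    rewritten_words = rewritten.split()
    # Compare word sequences; autojunk=False keeps long texts from being skewed
    # by difflib's "popular element" heuristic.
    matcher = SequenceMatcher(None, original_words, rewritten_words, autojunk=False)
    return 1.0 - matcher.ratio()


def looks_machine_written(
    text: str,
    rewrite: Callable[[str], str],  # hypothetical: wraps an LLM "please rewrite this" call
    threshold: float = 0.2,         # assumed cutoff, would need tuning on real data
) -> bool:
    """Guess that text is machine-written if the rewriter barely changes it."""
    rewritten = rewrite(text)
    return change_ratio(text, rewritten) < threshold
```

In practice, `rewrite` would send the text to an LLM with an instruction to revise it and return the result. A fixed threshold is the simplest possible decision rule; a real system would likely need something more careful, calibrated on examples of known human and machine text.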

What’s amazing is how well Raidar performs. It’s much better at detecting computer-written texts than previous methods, and it works well even with short pieces of text.

This is a big deal because a lot of the information we share and see online, like social media posts or comments, is quite brief but can still have a big impact on what we think or believe.

Being able to tell if text is written by humans or computers is really important for keeping trust and accuracy online.

Whether it’s a news article, an essay, or a product review, knowing that the information comes from a real person and not a computer trying to mislead us or spread misinformation is crucial.

The lead researcher, Chengzhi Mao, emphasizes how important this tool is for maintaining the integrity of digital content.

As computers get better at creating realistic texts, having a way to check the authenticity of digital information becomes more and more essential.

The Columbia team isn’t stopping here, though. They want to make Raidar even better by testing it on texts from different areas, in multiple languages, and even on computer code.

They’re also looking into ways to detect computer-generated images, videos, and audio, aiming to create a comprehensive toolset for identifying AI-created content across the board.

In a world where AI’s capabilities continue to grow, Raidar represents a beacon of hope for preserving the trustworthiness and reliability of the information that shapes our society and our beliefs.