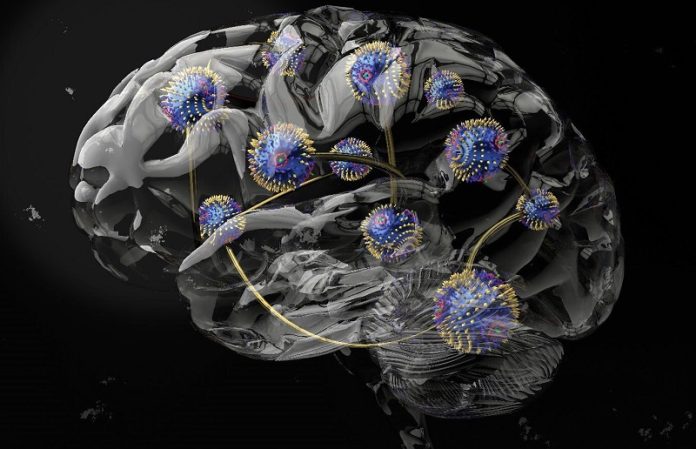

Researchers from UCL and Imperial College London have made a big leap in creating a new kind of computing that’s inspired by how our brains work.

Their study, published in Nature Materials, shows how using twisted magnets can make computers more adaptable and energy-efficient.

This kind of computing is called “physical reservoir computing.” It’s special because it uses the natural properties of materials to process data, which can save a lot of energy. Until now, though, this approach had a limitation: it wasn’t very flexible. A material might be good for some computing tasks but not others.
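As a rough software analogue of the idea, the sketch below uses an echo state network: a fixed, random recurrent network stands in for the physical material, and only a simple linear readout is trained. This is not the magnetic hardware or the method used in the study. Every name and parameter here (`run_reservoir`, `train_readout`, `delay_task_error`, the node count, the scaling factors) is an illustrative assumption.

```python
import numpy as np

def run_reservoir(inputs, n_nodes=50, spectral_radius=0.9, input_scale=0.5, seed=0):
    """Drive a fixed random recurrent network and record its states.

    The network weights are never trained; they play the role that the
    material's natural dynamics play in a physical reservoir.
    """
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-input_scale, input_scale, size=n_nodes)
    w = rng.normal(size=(n_nodes, n_nodes))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius,
    # which keeps the influence of past inputs fading rather than growing.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))

    states = np.zeros((len(inputs), n_nodes))
    x = np.zeros(n_nodes)
    for t, u in enumerate(inputs):
        x = np.tanh(w @ x + w_in * u)
        states[t] = x
    return states

def train_readout(states, targets, ridge=1e-6):
    """Fit the only trained part: a linear readout, via ridge regression."""
    a = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(a, states.T @ targets)

def delay_task_error(delay=2, n_steps=2000, washout=100, seed=0):
    """Normalised error when recalling the input from `delay` steps ago."""
    rng = np.random.default_rng(seed + 1)
    u = rng.uniform(-1.0, 1.0, size=n_steps)
    states = run_reservoir(u, seed=seed)
    target = np.roll(u, delay)  # target[t] == u[t - delay]

    # Drop the start-up transient (this also drops the wrap-around from roll).
    s, y = states[washout:], target[washout:]
    half = len(y) // 2
    w_out = train_readout(s[:half], y[:half])   # train on the first half
    pred = s[half:] @ w_out                     # evaluate on the second half
    return np.sqrt(np.mean((pred - y[half:]) ** 2)) / np.std(y[half:])

if __name__ == "__main__":
    print("normalised recall error:", delay_task_error(delay=2))
```

The recall task is a simple probe of the reservoir's memory. In this software version, settings such as `spectral_radius` are fixed in advance, which loosely mirrors the limitation described above: one configuration suits some tasks better than others.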

Dr. Oscar Lee, the lead author of the study, explains that their work is bringing us closer to computers that use much less energy and can adjust their abilities to do different tasks well, just like our brains do. The next step is to find materials and designs that are practical and can be produced on a large scale.

Traditional computers use a lot of electricity. This is partly because they have separate parts for storing and processing data, and moving information between these parts uses extra energy and creates heat. This is a big issue, especially for machine learning, which involves large amounts of data. Training just one big AI model can produce hundreds of tons of carbon dioxide.

Physical reservoir computing, a brain-inspired approach, aims to solve this problem. It combines memory and processing in a way that’s more energy-efficient. It could also be added to existing computer systems to give them new, energy-saving abilities.

In their study, which included teams from Japan and Germany, the researchers used a specialised instrument to measure how much energy chiral (twisted) magnets absorbed at different magnetic field strengths and temperatures, ranging from very cold (-269 °C) to room temperature.

They discovered that different magnetic phases of these twisted magnets were good at different types of computing tasks. For example, in the skyrmion phase (where magnetised particles swirl in a vortex-like pattern), the magnets had a strong memory capacity, which suits forecasting tasks. In the conical phase, the magnets had less memory but were better at tasks like transforming data or classification (like telling if an animal is a cat or a dog).

Dr. Jack Gartside from Imperial College London said that their collaborators at UCL, led by Professor Hidekazu Kurebayashi, had found promising materials for this kind of computing. These materials can support a wide range of magnetic patterns.

Working with Dr. Oscar Lee, the team at Imperial College London designed a computing architecture that takes advantage of these complex material properties. This design showed excellent results, demonstrating how changing the physical state of the materials could directly improve computing performance.

This research, which also involved experts from the University of Tokyo and Technische Universität München, represents a significant step towards more efficient and adaptable computing, moving closer to the way our brains process information.