The human brain is an amazing thing.

It has roughly 86 billion neurons, or nerve cells, that talk to each other through connections called synapses.

Unlike a laptop, which routes most of its work through a central processor, our brain does many calculations in parallel and combines the results.

We don’t understand everything about how the brain works yet, but we can use what we do know to make our computers work more like it.

One way to do this is through spiking neural networks (SNNs). These are computing systems designed to work like our brains.

In SNNs, information is processed in little bursts of activity called spikes, just like in our brains when a neuron fires. One cool thing about SNNs is that they process spikes as they happen, instead of waiting for a bunch of them to pile up and then dealing with them all at once.

This lets SNNs react to changes faster and do certain types of calculations more efficiently than normal computers.
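To make the idea of a spike a bit more concrete, here is a minimal sketch of a single spiking neuron. It assumes the leaky integrate-and-fire model, a common textbook simplification that is not necessarily what any particular SNN device uses. The function name `simulate_lif` and all parameter values are made up for illustration.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    Returns the time-step indices at which the neuron spiked.
    All parameters are illustrative assumptions, not measured values.
    """
    v = v_rest
    spike_times = []
    for step, current in enumerate(input_current):
        # The voltage leaks back toward rest while the input pushes it up
        # (simple forward-Euler update).
        v += dt / tau * (-(v - v_rest) + current)
        if v >= v_thresh:
            # Threshold crossed: record a spike right away, then reset.
            spike_times.append(step)
            v = v_reset
    return spike_times


weak = simulate_lif([0.5] * 100)    # input too weak to ever reach threshold
strong = simulate_lif([2.0] * 100)  # stronger input produces regular spikes
print(len(weak), len(strong))
```

The neuron stays silent when the input is weak and only sends a spike at the moment its voltage crosses the threshold, so downstream neurons have nothing to process until something actually happens.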

SNNs are also a natural fit for some things that are awkward for normal computers, like processing information over time and changing the strength of their connections based on when their spikes happen.

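This learning technique is called spike-timing-dependent plasticity (STDP), and it’s a type of Hebbian learning. In simple terms, Hebbian learning is the idea that “cells that fire together wire together”, which means that neurons that are active at the same time tend to strengthen their connections. STDP adds timing to the picture: a connection typically gets stronger when the sending neuron fires just before the receiving one, and weaker when the order is reversed.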
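Here is a rough sketch of how a timing-based rule like STDP can be written down. It assumes the widely used pair-based form with exponential time windows. The function name `stdp_weight_change` and the constants `a_plus`, `a_minus`, and `tau` are illustrative assumptions, not values from any specific paper.

```python
import math


def stdp_weight_change(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for one pre/post spike pair (times in milliseconds).

    Pair-based STDP with exponential windows; constants are assumptions.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Sender fired before receiver: strengthen the connection,
        # more so when the two spikes are close together.
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        # Receiver fired before sender: weaken the connection.
        return -a_minus * math.exp(dt / tau)
    return 0.0  # simultaneous spikes: no change in this sketch


print(stdp_weight_change(10.0, 15.0))  # positive: connection gets stronger
print(stdp_weight_change(15.0, 10.0))  # negative: connection gets weaker
```

The further apart the two spikes are, the smaller the change, so only neurons that fire close together in time end up reshaping their connection.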

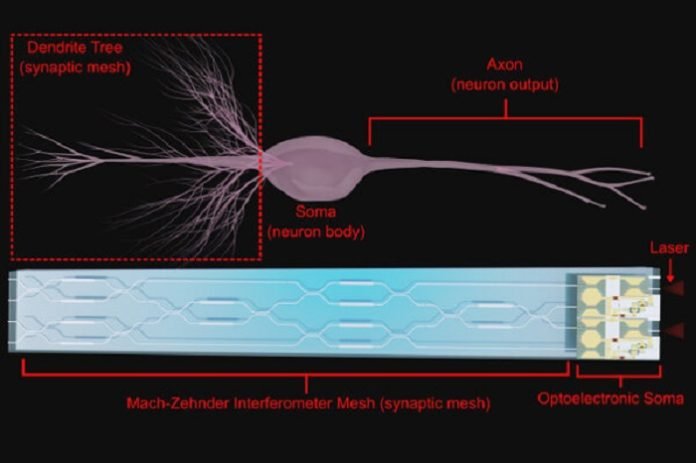

A recent scientific paper has described a new SNN device that uses both light (optical) and electrical parts.

The authors designed special optoelectronic neurons that can accept information from a light-based communication network, process it with electrical circuits, and send information back using a laser. They report that this setup can transfer data and communicate between systems faster than traditional electronic-only systems.

They also use some pretty smart algorithms, such as Random Backpropagation and Contrastive Hebbian Learning, to make their system learn more like a brain. According to the paper, this gives their system a boost in performance compared to traditional machine learning systems that use standard backpropagation.

So, what are these SNNs good for? Well, they’re great for tasks that need to process data continuously over time, like handling signals in real-time.

They can also have multiple types of memory that work over different lengths of time, just like we have working, short-term, and long-term memory. For instance, SNNs can be used to control a robotic arm that can adapt as it wears down.

In the future, we might see SNNs used for more complex tasks, like processing live audio and understanding natural language for voice assistants, providing live captions, or separating different sounds.

They could also be used for processing live video and 3D data from lidar (a ranging technique similar to radar that uses laser light instead of radio waves) in self-driving cars or security systems. The possibilities are truly exciting!