Lighting can make or break a photo.

Whether you’re snapping a portrait or shooting a movie scene, the way light hits the subject plays a huge role in how the final image feels.

Photographers and filmmakers spend hours—and often a lot of money—trying to get the lighting just right. But once a photo is taken, changing the lighting afterward has always been tricky.

The process is called “relighting,” and it has traditionally been slow, technical work that skilled artists must do by hand.

Now, researchers at Simon Fraser University (SFU) in Canada have created a new tool that could make photo relighting far more accessible, flexible, and realistic.

Unlike AI tools that try to guess how to change the light in a photo using massive datasets, this method gives users direct control over how light behaves in an image—just like they would have if they were shooting in a real studio.

The research, described in a paper titled “Physically Controllable Relighting of Photographs,” will be presented at the SIGGRAPH 2025 conference in Vancouver.

It was developed by the Computational Photography Lab at SFU, led by Dr. Yağız Aksoy.

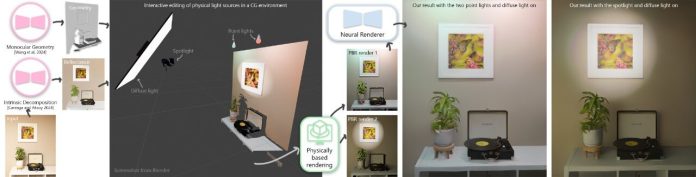

The lead author, Ph.D. student Chris Careaga, explains that their method works by first creating a 3D version of the photo. This 3D model captures the shapes and surface colors of the scene, but importantly, it does not include the original lighting.

Once this virtual 3D scene is built, the user can place digital light sources wherever they like, just as if they were using lighting equipment in a photography studio or designing a scene in 3D software like Blender or Unreal Engine.

These virtual lights are then simulated using techniques borrowed from computer graphics, creating a rough preview of how the photo would look with the new lighting.
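To give a rough sense of what “simulating” a virtual light can mean, here is a minimal Python sketch of a textbook diffuse (Lambertian) shading step. It is not the SFU team’s code or method. It assumes the 3D reconstruction already gives each pixel a surface color (albedo), a surface direction (normal) and a 3D position, and the names `Pixel`, `PointLight`, `shade_pixel` and `render_preview` are hypothetical. It also ignores shadows and light bouncing between surfaces, which is one reason a preview like this looks more like a sketch than a photo.

```python
import math
from dataclasses import dataclass

# A 3D vector or an RGB triple.
Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    if length == 0.0:
        return v
    return (v[0] / length, v[1] / length, v[2] / length)


@dataclass
class Pixel:
    """Hypothetical per-pixel output of a 3D reconstruction (no lighting baked in)."""
    albedo: Vec3    # surface color in [0, 1] per RGB channel
    normal: Vec3    # direction the surface faces
    position: Vec3  # 3D location of the surface point


@dataclass
class PointLight:
    """Hypothetical virtual lamp placed by the user."""
    position: Vec3
    color: Vec3     # RGB intensity


def shade_pixel(px: Pixel, light: PointLight) -> Vec3:
    """Diffuse (Lambertian) contribution of one point light to one pixel."""
    to_light = tuple(l - p for l, p in zip(light.position, px.position))
    dist_sq = dot(to_light, to_light)
    if dist_sq == 0.0:
        return (0.0, 0.0, 0.0)  # light sits exactly on the surface: skip this degenerate case
    # Lambert's cosine law: surfaces facing away from the light receive nothing.
    cos_theta = max(0.0, dot(normalize(px.normal), normalize(to_light)))
    # Inverse-square falloff, with albedo / pi as the diffuse reflectance.
    scale = cos_theta / dist_sq / math.pi
    return tuple(a * c * scale for a, c in zip(px.albedo, light.color))


def render_preview(pixels: list[Pixel], lights: list[PointLight]) -> list[Vec3]:
    """Sum every light's contribution per pixel; no shadows or bounce light."""
    image = []
    for px in pixels:
        total = [0.0, 0.0, 0.0]
        for light in lights:
            for i, value in enumerate(shade_pixel(px, light)):
                total[i] += value
        image.append((total[0], total[1], total[2]))
    return image


if __name__ == "__main__":
    px = Pixel(albedo=(0.8, 0.6, 0.5), normal=(0.0, 0.0, 1.0), position=(0.0, 0.0, 0.0))
    key = PointLight(position=(1.0, 0.0, 2.0), color=(10.0, 10.0, 10.0))
    fill = PointLight(position=(-2.0, 0.0, 1.0), color=(3.0, 3.0, 3.0))
    print(render_preview([px], [key, fill]))
```

Adding another `PointLight` simply adds its contribution to every pixel, which loosely mirrors adding a lamp to a studio setup. Real rendering engines go much further, tracing shadows and indirect light.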

Of course, this early preview isn’t photorealistic—it’s more like a sketch. That’s where the team’s neural network comes in. It takes the preview and transforms it into a polished, realistic image that looks like it was shot with the new lighting setup in real life.

What makes this tool especially exciting is that it doesn’t rely on vague AI guesses or trial-and-error prompts. Instead, it gives artists and photographers full control over where lights go, how strong they are, and what kind of effect they create.

It’s a level of creative freedom that existing generative AI tools often can’t offer.

Right now, the system only works with still photos, but the SFU team is working on expanding it to video. That would make it a game-changer for filmmakers, especially those who don’t have big budgets for gear or reshoots. Imagine being able to fix lighting in post-production without needing to film the whole scene again.

Dr. Aksoy says this technology could save time and money for creators while keeping their artistic vision intact.

The project is part of a larger series from the lab focused on “illumination-aware” editing tools. The lab’s earlier work on breaking down images into separate lighting and material layers helped lay the foundation for this new system.

More details and an explainer video can be found on the Computational Photography Lab’s website.