As artificial intelligence (AI) continues to generate images that are nearly impossible to tell apart from real photos, digital watermarks have been proposed as a way to identify AI-generated content.

These watermarks—hidden digital marks embedded in the image—are meant to help us tell what’s real and what’s synthetic.

A special type called semantic watermarking is built deep into the AI image generation process and has been considered nearly impossible to remove or fake.

But researchers from Ruhr University Bochum in Germany have now shown that these supposedly secure watermarks can, in fact, be manipulated with surprising ease.

Presenting their findings at the CVPR 2025 conference in Nashville, the team revealed two simple but powerful techniques that allow attackers to either remove or fake semantic watermarks.

Their study is also available on the arXiv preprint server.

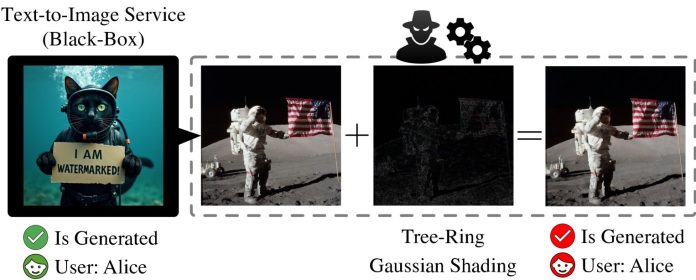

The first technique, called the “imprinting attack,” works by changing the way a real photo is encoded inside the AI model, known as its latent representation.

This process makes the image appear as if it has the same watermark as an AI-generated image. In other words, someone could take a genuine photo, add a fake watermark, and make it look as if it was created by AI. This could be used to mislead others or discredit authentic content.
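To make the idea concrete, here is a minimal toy sketch in Python, not the researchers' actual method. It assumes a hypothetical linear `ENCODER` in place of a real image encoder and a made-up detector that compares a few latent values with a secret `key`. All names and sizes are illustrative. The attacker only uses the encoder and one watermarked image, never the key.

```python
import numpy as np

rng = np.random.default_rng(0)
IMAGE_DIM, LATENT_DIM, KEY_DIM = 256, 16, 8

# Hypothetical stand-in for an image encoder: a fixed linear map with
# orthonormal rows, so that encode(decode(z)) returns z.
ENCODER = np.linalg.qr(rng.standard_normal((IMAGE_DIM, LATENT_DIM)))[0].T

def encode(image):
    return ENCODER @ image

def decode(latent):
    return ENCODER.T @ latent

def watermark_distance(image, key):
    """Mean absolute gap between the key and the latent slots that hold it."""
    return float(np.mean(np.abs(encode(image)[:KEY_DIM] - key)))

def imprint(cover, watermarked, steps=20, step_size=0.5):
    """Nudge `cover` until its latent matches that of `watermarked`."""
    target = encode(watermarked)
    forged = cover.copy()
    for _ in range(steps):
        residual = encode(forged) - target
        # Gradient of 0.5 * ||encode(forged) - target||^2 w.r.t. forged.
        forged -= step_size * (ENCODER.T @ residual)
    return forged

# Provider side (toy): a secret key sits in the first KEY_DIM latent slots.
key = rng.standard_normal(KEY_DIM)
latent = rng.standard_normal(LATENT_DIM)
latent[:KEY_DIM] = key
watermarked_image = decode(latent)

# Attacker side: a "real photo" that carries no watermark.
cover_image = rng.standard_normal(IMAGE_DIM)
forged_image = imprint(cover_image, watermarked_image)

before = watermark_distance(cover_image, key)
after = watermark_distance(forged_image, key)
print(f"distance to key before: {before:.3f}, after: {after:.6f}")
```

In this toy setting the forged image ends up with a latent that matches the watermarked one, while most of the original photo's content is left untouched because the encoder only looks at a small part of the image. Real encoders are nonlinear, so an actual attack would need a more careful optimization.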

The second technique is known as the “reprompting attack.” Here, a watermarked image is mapped back into the AI model’s internal latent representation, which is then used to generate a new image with a different subject that still carries the original watermark. This means the watermark no longer proves where the image came from or what it originally showed. It can be reused in entirely unrelated content.
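The same kind of toy model can illustrate reprompting. The sketch below is an assumption-laden illustration, not the study's implementation: `generate`, `invert`, and `has_watermark` are hypothetical functions, prompts are represented as random vectors, and inversion is exact, which a real attacker could only approximate.

```python
import numpy as np

rng = np.random.default_rng(1)
IMAGE_DIM, LATENT_DIM, KEY_DIM = 256, 16, 8

# Hypothetical linear "model": BASIS has orthonormal columns.
BASIS = np.linalg.qr(rng.standard_normal((IMAGE_DIM, LATENT_DIM)))[0]

def generate(latent, prompt_vector):
    """Toy generator: the latent fills one subspace, the prompt the rest."""
    content = prompt_vector - BASIS @ (BASIS.T @ prompt_vector)
    return BASIS @ latent + content

def invert(image):
    """Toy inversion: recover the starting latent from an image."""
    return BASIS.T @ image

def has_watermark(image, key, threshold=0.1):
    """Toy detector: compare the recovered latent with the secret key."""
    gap = np.mean(np.abs(invert(image)[:KEY_DIM] - key))
    return bool(gap < threshold)

def reprompt(watermarked, new_prompt_vector):
    """Reuse the latent of a watermarked image with a different prompt."""
    return generate(invert(watermarked), new_prompt_vector)

# Provider side (toy): the key is written into the starting latent.
key = rng.standard_normal(KEY_DIM)
latent = rng.standard_normal(LATENT_DIM)
latent[:KEY_DIM] = key

original_prompt = rng.standard_normal(IMAGE_DIM)  # stands in for one subject
attacker_prompt = rng.standard_normal(IMAGE_DIM)  # stands in for another

original_image = generate(latent, original_prompt)
reprompted_image = reprompt(original_image, attacker_prompt)
unmarked_image = generate(rng.standard_normal(LATENT_DIM), attacker_prompt)

print("original image flagged:  ", has_watermark(original_image, key))
print("reprompted image flagged:", has_watermark(reprompted_image, key))
print("unmarked image flagged:  ", has_watermark(unmarked_image, key))
```

Because the toy watermark lives in the starting latent and not in the picture's subject, the reprompted image shows different content yet still passes the detector, while a fresh image made without the key does not.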

What makes these attacks especially worrying is that they work across different types of AI systems. Whether the image was made using older models or the latest technology, both types of attacks still succeed. All that’s needed is one image that already contains a watermark.

The researchers warn that this could have major consequences for the future of digital trust. If watermarks can be so easily faked or reused, then our ability to verify whether an image was made by AI breaks down. This creates serious risks for journalism, online safety, and even national security.

Dr. Andreas Müller, one of the study’s authors, says the current methods of watermarking need to be completely rethought. Without a better way to mark and authenticate AI-generated content, it may become impossible to know what’s real in the digital world.