Fish are incredible team players.

When they swim in schools, there’s no leader giving orders, yet each fish knows how to stay in formation, avoid bumping into others, and move with amazing flexibility.

This natural coordination has fascinated scientists and engineers for years, especially those trying to build robot swarms that can work together just as smoothly.

Now, researchers in Konstanz, Germany, have made a major breakthrough by using virtual reality (VR) to study how fish manage this complex behavior.

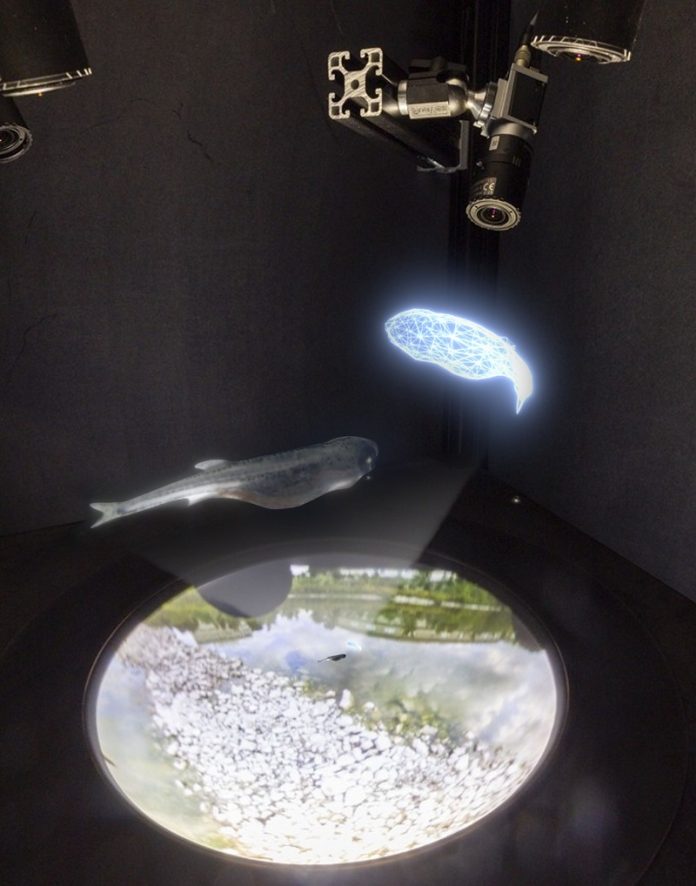

The study, recently published in Science Robotics, involved placing juvenile zebrafish in high-tech arenas where they could swim freely while interacting with virtual “holographic” fish.

Each virtual fish in fact stood in for a real fish swimming in another arena, with all the arenas linked through a shared VR environment.

This setup allowed fish to swim and respond to each other in real time, as if they were all in the same tank.

This special VR setup gave scientists a rare chance to carefully control what each fish saw and experienced.

By doing so, they could figure out what visual cues the fish used to coordinate their movements.

Instead of just guessing how fish interact, the team was able to “reverse-engineer” the rules fish follow while swimming in groups.

The surprising discovery was that the fish don’t rely on complex information. Each fish mainly follows its neighbors based on where those neighbors appear in its field of vision, not on how fast they are moving. In other words, just knowing the position of another fish is enough to coordinate effectively.
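To make the idea of a position-only rule concrete, here is a minimal sketch in Python. It is an illustration, not the model fitted in the study: the function names, the proportional form, and the `gain` parameter are all assumptions. What it does show is that the follower steers using only where the neighbor appears relative to its own heading, and never looks at the neighbor's speed.

```python
import math

def bearing_to_neighbor(x, y, heading, nx, ny):
    """Angle at which the neighbor appears, relative to the follower's
    heading, wrapped to the range [-pi, pi]. Positive means 'to the left'."""
    angle = math.atan2(ny - y, nx - x) - heading
    return math.atan2(math.sin(angle), math.cos(angle))

def turn_rate(x, y, heading, nx, ny, gain=1.0):
    """Hypothetical position-only steering rule: turn toward where the
    neighbor appears. The neighbor's velocity is not an input at all.
    The proportional gain is an assumed parameter for illustration."""
    return gain * bearing_to_neighbor(x, y, heading, nx, ny)

# A follower at the origin facing along +x, with a neighbor on its left:
print(turn_rate(0.0, 0.0, 0.0, 0.0, 1.0))   # positive, so turn left
# The same follower with the neighbor on its right:
print(turn_rate(0.0, 0.0, 0.0, 0.0, -1.0))  # negative, so turn right
```

Note that the rule needs no estimate of the neighbor's speed or direction of travel, which is part of what makes it so cheap to compute.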

To test how realistic this rule was, the researchers created a virtual fish that sometimes followed the real fish using the discovered rule and sometimes acted like a real fish.

The real fish couldn’t tell the difference: they responded the same way in both cases. This “aquatic Turing test” showed just how convincing and effective the simple rule was.

But the study didn’t stop with fish. The researchers took the same rule and used it to guide swarms of robots, including cars, drones, and boats.

These robots were asked to follow a moving target, and their performance was compared with that of robots using a popular but more complex method called Model Predictive Control (MPC). The fish-inspired method performed nearly as well as MPC, but it was much simpler and used less computing power.
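The target-following task can be sketched as a small simulation. This is a toy under stated assumptions, not the researchers' controller and not an MPC comparison: the robot model (a point that turns and moves forward), the gains `k_turn` and `k_speed`, the speed cap, and the straight-line target are all hypothetical choices made for illustration. The robot uses only the target's current position, and each control step costs a handful of arithmetic operations.

```python
import math

def simulate_follow(steps=2000, dt=0.05, target_speed=1.0,
                    max_speed=2.0, k_turn=2.0, k_speed=1.0):
    """Simulate a robot chasing a target that moves along +x.
    Returns the robot-to-target distance recorded at every step."""
    rx, ry, heading = 0.0, 0.0, 0.0   # robot starts at the origin, facing +x
    tx, ty = 5.0, 5.0                 # target starts ahead and to the left
    distances = []
    for _ in range(steps):
        tx += target_speed * dt       # the target moves; the robot never sees its velocity
        dx, dy = tx - rx, ty - ry
        distance = math.hypot(dx, dy)
        # Where the target appears relative to the robot's heading.
        bearing = math.atan2(dy, dx) - heading
        bearing = math.atan2(math.sin(bearing), math.cos(bearing))
        # Position-only control: turn toward the target, speed up when far away.
        heading += k_turn * bearing * dt
        speed = min(max_speed, k_speed * distance)
        rx += speed * math.cos(heading) * dt
        ry += speed * math.sin(heading) * dt
        distances.append(distance)
    return distances

d = simulate_follow()
print(f"start distance: {d[0]:.2f}, final distance: {d[-1]:.2f}")
```

In this toy setup the robot closes most of the initial gap and then trails the target at a roughly constant distance. A predictive controller, by contrast, typically solves an optimization problem over a planning horizon at every step, which is where the extra computing cost comes from.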

This research shows how much we can learn from nature. By studying how animals solve complex problems in simple ways, scientists can design better, more efficient robots.

As the researchers explain, it’s a two-way street: robots help us understand biology, and biology inspires better robots.