An international team of researchers has discovered serious flaws in current deepfake detection technologies, raising concerns about the reliability of tools used to spot AI-generated fake images, videos, and audio.

The study, conducted by Australia’s national science agency CSIRO and South Korea’s Sungkyunkwan University (SKKU), found that none of the 16 leading deepfake detectors tested could reliably identify real-world deepfakes. The findings were published on the arXiv preprint server.

Deepfakes are AI-generated synthetic media that can convincingly manipulate videos, images, and voices to create false content.

While this technology has some positive uses, it also poses serious risks, including misinformation, fraud, and privacy violations.

According to Dr. Sharif Abuadbba, a cybersecurity expert at CSIRO, rapid advances in generative AI have made deepfakes cheaper and easier to create, and more convincing than ever before.

“Deepfakes are becoming harder to detect, which makes it easier for them to spread false information. We need better detection tools that can adapt to new threats,” Dr. Abuadbba said.

Why are current detectors failing?

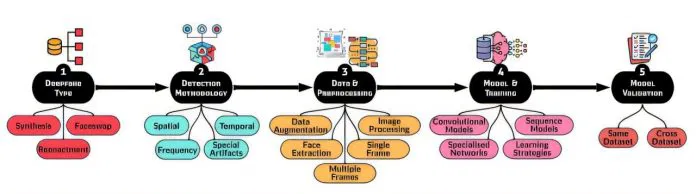

The researchers developed a five-step framework to assess deepfake detection tools.

Their tests identified 18 factors that affect detection accuracy, including how data is processed, how detection models are trained, and how they are evaluated.

One key issue is that many deepfake detectors work well only on specific types of fake content. For example, a widely used detector called ICT (Identity Consistent Transformer) was trained on celebrity faces.

However, it performed poorly when tested on deepfakes of non-celebrities, showing that detection tools can struggle when faced with new or unfamiliar content.

Dr. Kristen Moore, another CSIRO cybersecurity expert, emphasized the need for more advanced detection strategies. She suggested that future tools should combine multiple detection methods rather than relying solely on image or audio analysis.

“We are working on models that integrate audio, text, images, and metadata for better accuracy,” Dr. Moore said.

Another promising approach is fingerprinting, which traces the origins of deepfakes to help identify them more effectively. Researchers also recommend training detection tools on larger and more diverse datasets, including synthetic data.

As deepfake technology continues to evolve, detecting fake content will only become harder. However, this research offers concrete insights into how detection tools can be improved to keep pace with increasingly realistic deepfakes.

By developing smarter, more adaptable detection systems, scientists hope to better protect the public from deepfake-driven misinformation and fraud.