Robots are getting smarter!

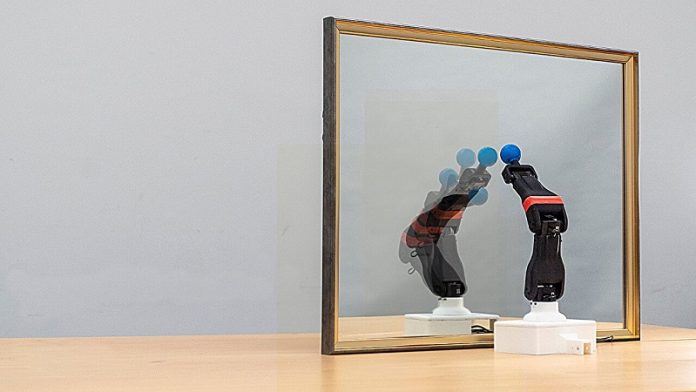

A new study from Columbia Engineering shows that robots can now learn how to move simply by watching themselves, much as a person might study their own movements in a mirror.

This means they can model their own bodies, plan movements, and even compensate when they get damaged.

The research, published in Nature Machine Intelligence, was led by Yuhang Hu, a PhD student at Columbia University’s Creative Machines Lab, under the guidance of Professor Hod Lipson.

The goal? To create robots that don’t need constant human programming but can instead figure things out on their own.

How do robots learn about themselves?

Traditionally, robots first learn movement in virtual simulations before being tested in the real world.

However, building these simulations takes considerable time and expertise. The new study offers a different approach: letting robots observe their own motion through a single ordinary camera.

By watching video footage of themselves, robots can build a digital model of their own shape and movement. This is possible thanks to deep learning, a type of artificial intelligence loosely inspired by the human brain. The AI processes the flat 2D video and turns it into a 3D model of the robot's body and how it moves.

Why is this important?

One major advantage of this method is that robots can adapt to changes or damage.

For example, imagine a robot vacuum whose arm gets bent in an accident. Instead of breaking down or waiting for repairs, it could recognize the problem, adjust its movements, and keep working.

This self-awareness could also be useful in factories. If a robot arm used in car production is knocked out of alignment, it could detect the issue, adjust its movements, and keep working instead of shutting down. This could save companies time and money.

A step toward more independent robots

This work is the latest step in a long effort to make robots more self-sufficient. In 2006, Lipson's lab developed robots that could build simple stick-figure models of themselves.

A decade later, the lab improved on this approach by using multiple cameras to build more detailed self-models. Now, with just a single camera and a short video clip, robots can model how their own bodies move, an ability the researchers call kinematic self-awareness.

Professor Lipson believes that this ability brings robots closer to true independence. “Once a robot can imagine itself and predict the results of its actions, there’s no limit to what it can do,” he says.

This research could lead to smarter, more reliable robots that can take care of themselves, much like humans do!