Imagine sitting in a noisy office or a bustling restaurant, wearing headphones that let you clearly hear the people nearby while tuning out background noise.

Researchers at the University of Washington have now turned this futuristic idea into a working prototype.

They’ve developed a headphone prototype that creates a “sound bubble,” allowing the wearer to hear people within a radius of 3 to 6 feet while quieting noises from farther away.

This innovation uses artificial intelligence (AI) to control the bubble.

Sounds from outside the bubble are suppressed by an average of 49 decibels, roughly the difference between a vacuum cleaner and rustling leaves, even if those distant noises are louder than the ones nearby.
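To get a feel for what a 49-decibel reduction means, the figure can be converted into plain ratios using the standard decibel definitions. The short sketch below is only an illustration of that arithmetic; the function names are made up for this example and are not part of the researchers' system.

```python
# Convert a decibel reduction into linear ratios using the standard definitions:
#   amplitude (sound pressure) ratio = 10 ** (dB / 20)
#   power (intensity) ratio          = 10 ** (dB / 10)
# Function names here are illustrative, not taken from the research.

def db_to_amplitude_ratio(db):
    """How many times smaller the sound pressure becomes."""
    return 10 ** (db / 20)


def db_to_power_ratio(db):
    """How many times smaller the sound power becomes."""
    return 10 ** (db / 10)


SUPPRESSION_DB = 49  # average suppression reported in the article

amplitude_ratio = db_to_amplitude_ratio(SUPPRESSION_DB)
power_ratio = db_to_power_ratio(SUPPRESSION_DB)

print(f"Sound pressure reduced about {amplitude_ratio:.0f}-fold")
print(f"Sound power reduced about {power_ratio:.0f}-fold")
```

In other words, a 49-decibel cut leaves a distant sound with a few hundred times less sound pressure, and tens of thousands of times less power, than it had before.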

The technology is detailed in the journal Nature Electronics, and the team is working on turning it into a commercial product.

“Humans aren’t great at figuring out how far away a sound is, especially in noisy places like restaurants,” said Shyam Gollakota, a professor at the University of Washington.

“Our AI system can calculate the distance of each sound in a room and process this information in real time, within just 8 milliseconds.”

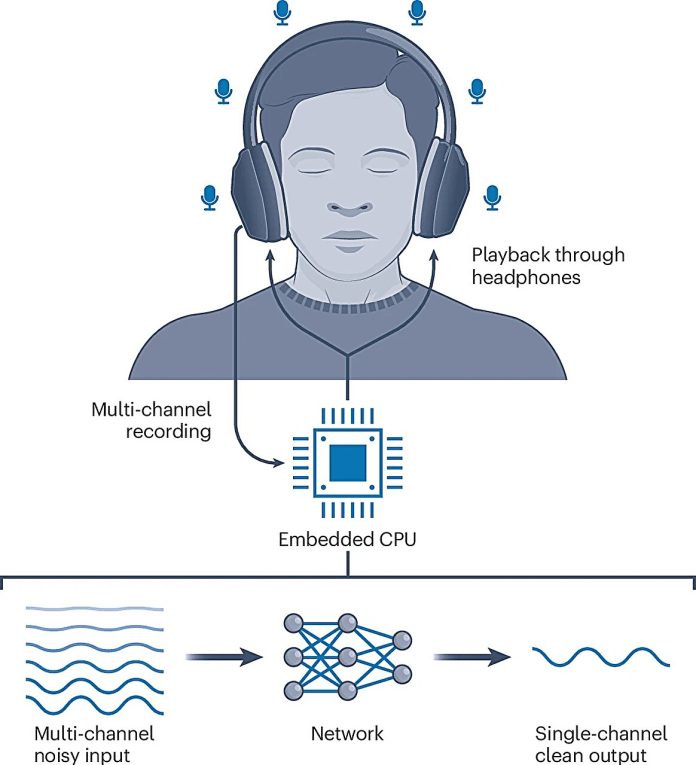

To create the prototype, the researchers modified existing noise-canceling headphones by attaching six tiny microphones to the headband.

These microphones capture sound, and an AI algorithm running on a small onboard computer tracks when each sound reaches each of the six microphones. The differences in those arrival times help the system distinguish sounds based on their distance.
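Why would arrival times say anything about distance? A rough geometric sketch can help. The code below is not the team's neural network. It is a simplified model with a hypothetical layout (six microphones in a straight 18 cm line, sound traveling at about 343 meters per second) that compares how the arrival-time pattern changes when the same sound source sits close by or farther away.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second, approximate value for indoor air

# Hypothetical layout: six microphones evenly spaced along an 18 cm line.
# The real prototype mounts its microphones on a curved headband.
MIC_POSITIONS = [(-0.09 + 0.036 * i, 0.0) for i in range(6)]


def source_at(distance_m, angle_deg):
    """Place a sound source at a given distance and angle from the array center."""
    angle = math.radians(angle_deg)
    return (distance_m * math.cos(angle), distance_m * math.sin(angle))


def relative_delays(source_xy):
    """Arrival time at each microphone minus the earliest arrival, in microseconds."""
    times = [math.dist(source_xy, mic) / SPEED_OF_SOUND for mic in MIC_POSITIONS]
    first = min(times)
    return [(t - first) * 1e6 for t in times]


# Same direction (straight ahead), two different distances.
near = relative_delays(source_at(1.0, 90))
far = relative_delays(source_at(5.0, 90))

print("near source delays (microseconds):", [round(d, 2) for d in near])
print("far source delays (microseconds): ", [round(d, 2) for d in far])
```

In this toy model, the nearby source produces a wider spread of delays across the microphones than the distant one, because its sound wave is still noticeably curved when it arrives. The differences are only a few microseconds, which hints at why a trained AI model, rather than a simple rule, is used to pull distance out of real recordings.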

Sounds within the bubble are amplified slightly for clarity, while those outside the bubble are silenced.

This approach differs from features on devices like Apple’s AirPods Pro 2, which track head position and amplify sounds coming from a specific direction. In contrast, the sound bubble technology works regardless of head position and can amplify multiple voices at once.

To train the system, the team needed to collect real-world data on sound distances. Since such a dataset didn’t exist, they built one using a mannequin with headphones.

A robotic platform moved the mannequin’s head while a speaker played sounds from different distances.

The researchers tested their system in 22 indoor environments, including offices and living rooms, and found it works well because of two key factors: how the head reflects sounds and how different sound frequencies behave over distance.

Currently, the technology only works indoors, as outdoor sounds are harder to process. The team’s next steps include adapting the system for smaller devices like hearing aids and earbuds, which will require new microphone setups.

This breakthrough could make noisy environments much easier to navigate, whether for conversations, work, or simply enjoying a quiet moment in a chaotic world.