Using data from the Zwicky Transient Facility, Southern California high school student Vanya Agrawal creates new “space music.”

In September 2023, Vanya Agrawal, a senior at Palos Verdes High School, was searching for a science research project.

“I’ve been interested in music since I was very young, and, over the past few years, I’ve also become interested in physics and astronomy,” Agrawal says.

“I was planning on pursuing both as separate disciplines, but then I began to wonder if there might be a way to combine the two.”

Enter data sonification. Just as researchers design graphs or diagrams or scatterplots to create a visual mapping of their data, they may also develop an audiomapping of their data by rendering it as sound. Instead of drawing a dot (or any other visual symbol) to correspond to a point of data, they record a tone.

Granted, this is highly unusual in scientific research, but it has been done. Her curiosity piqued, Agrawal soon found examples of these sonifications. In 1994, an auditory researcher, Gregory Kramer, sonified a geoseismic dataset, which led to the detection of instrument errors. In 2014, the CEO and co-founder of Auralab Technologies, Robert Alexander, rendered a spectral dataset into sound and found that participants could consistently identify wave patterns simply by listening.

Do these scientific sonifications make you want to sit yourself down in a concert hall to be swept away by the music they create?

Well, when you see a scatterplot of supernovae in an astrophysics journal, do you think, “What is that doing in an academic journal? It belongs on the wall of a museum!” Probably not often.

Here is where the artistic effort comes in: representing scientific information in ways that delight the eye or the ear. This was Agrawal’s goal, using an astrophysical dataset to make music that could draw in nonscientific audiences and help them to engage with new discoveries about the universe.

Agrawal first approached Professor of Astronomy Mansi Kasliwal (PhD ’11), a family friend, to see about finding an appropriate dataset to sonify. She was quickly put in touch with Christoffer Fremling, a staff scientist working with the Zwicky Transient Facility (ZTF) team. Using a wide-field-of-view camera on the Samuel Oschin Telescope at Caltech’s Palomar Observatory, ZTF scans the entire sky visible from the Northern Hemisphere every two days, weather permitting, observing dynamic events in space.

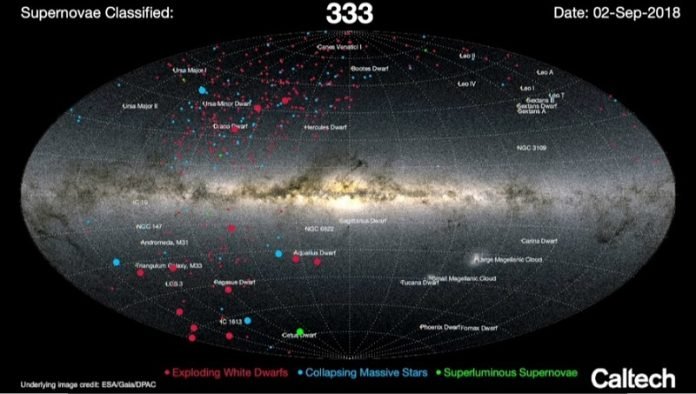

Many of the dynamic events observed by ZTF are supernovae, the explosions of dying stars. The dataset Agrawal received from Fremling, covering supernova observations from March 2018 to September 2023, contained more than 8,000 of them. She decided that each supernova detection would be one note in the music she was composing.

“I knew the things that I could modify about the music were when the note occurred, its duration, its pitch, its volume, and the instrument that played the note,” Agrawal says. “Then it was a matter of looking at the parameters measured in the dataset of supernova observations and deciding which were most significant and how they should be matched up to musical features.”

With Fremling’s input, Agrawal decided that the five measurements associated with supernova observations that she would sonify would be discovery date, luminosity, redshift (a quantifiable change in the wavelength of light indicating the light source’s distance from us), duration of explosion, and supernova type.

“Discovery date of a supernova has an obvious correlation with the time in which its associated note appears in the music,” Agrawal says, “and matching the duration of a supernova with the duration of the note and the type of supernova with the type of instrument playing the note also made the most sense.” As for the remaining parameters, Agrawal “flip-flopped back and forth with redshift and luminosity, which would go with pitch or volume. But I ultimately decided on having the luminosity correlate to volume because you can think of volume as the auditory equivalent to brightness. If something emits a dim light, that’s like a quiet sound, but if it emits a bright light, that correlates to a loud sound. That left redshift to be translated into pitch.”
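The mapping Agrawal describes can be sketched in a few lines of Python. This is only an illustration, not her actual code: the field names, the 0-to-1 luminosity scale, the MIDI-style 1-to-127 volume range, the `days_per_beat` parameter, and the instrument assigned to each supernova type are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Supernova:
    discovery_day: float  # days since the first observation (assumed unit)
    luminosity: float     # brightness, assumed already scaled to 0..1
    redshift: float
    duration_days: float
    sn_type: str


@dataclass
class Note:
    start_beats: float    # when the note sounds
    length_beats: float   # how long it is held
    redshift: float       # kept as-is; turned into a pitch in a later step
    velocity: int         # MIDI-style loudness, 1..127
    instrument: str


# Hypothetical instrument choices; the article does not say which were used.
INSTRUMENTS = {"Ia": "piano", "II": "strings", "Ib/c": "flute"}


def to_note(sn: Supernova, days_per_beat: float = 1.0) -> Note:
    """Translate one supernova observation into one note."""
    # Brighter supernova -> louder note, clamped to the valid range.
    velocity = max(1, min(127, round(sn.luminosity * 127)))
    return Note(
        start_beats=sn.discovery_day / days_per_beat,
        length_beats=sn.duration_days / days_per_beat,
        redshift=sn.redshift,
        velocity=velocity,
        instrument=INSTRUMENTS.get(sn.sn_type, "synth pad"),
    )
```

Raising `days_per_beat` packs the same observations into fewer beats, while lowering it spreads them out.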

Once parameters had been translated, the pitch values were modified to enhance the sound. Redshift had to be condensed into a tight range of pitches such that the result would be in the most audible range for human ears.
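One simple way to condense redshift into a narrow band of pitches is a linear rescaling, sketched below. The article does not say which formula Agrawal used, so the linear form, the MIDI note range of 48 to 84, and the choice to map higher redshift to higher pitch are all assumptions.

```python
def redshift_to_midi(z: float, z_min: float, z_max: float,
                     low: float = 48, high: float = 84) -> float:
    """Linearly rescale a redshift into the pitch range [low, high].

    z_min and z_max are the smallest and largest redshifts in the dataset.
    The default range (MIDI notes 48 to 84) is an assumed, comfortably
    audible band, not a value taken from the composition.
    """
    if z_max == z_min:
        # Degenerate dataset: every note gets the middle of the range.
        return (low + high) / 2
    frac = (z - z_min) / (z_max - z_min)
    frac = min(1.0, max(0.0, frac))  # clamp values outside the dataset range
    return low + frac * (high - low)
```

The result is a fractional pitch, which still has to be rounded to a real note before it can be played.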

The initial result, according to Agrawal, was less than euphonious. Fremling, who had tried his own hand at setting down sounds in relationship to each supernova, had the same result: The music, he said, “did not sound good at all.”

“I don’t think I realized how many notes 8,000 actually is,” Agrawal says. “I was definitely picturing it to be a lot slower and more spread out, but after converting the data to sound I heard how densely packed the notes were.”

To achieve a sparser texture, Agrawal slowed the tempo of the sound file, extending its length to about 30 minutes, and then set about manipulating and enhancing the musicality of the piece. To ensure that the music would evoke outer space, Agrawal rounded pitches to fit into what is known as the Lydian augmented mode, a scale that begins with whole tones, which, Agrawal says, “feel less settled and rooted than ordinary major or minor scales. This resembles the scales in sci-fi music, so I thought it would be beneficial for representing the vastness of space.” Agrawal then added a percussion track, a chord track that harmonized dominant pitches in the dataset, and effects such as the sound of wind and distorted chattering.
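Rounding a pitch to the Lydian augmented mode amounts to snapping it to the nearest note of that scale. The sketch below shows one way to do this. The scale's interval pattern (1, 2, 3, ♯4, ♯5, 6, 7) is standard music theory, but the root note (middle C, MIDI 60) and the rule for breaking ties are assumptions, since the article does not give the key Agrawal chose.

```python
# Semitone offsets of the Lydian augmented mode above its root:
# 1, 2, 3, #4, #5, 6, 7. The first four steps are all whole tones.
LYDIAN_AUGMENTED = (0, 2, 4, 6, 8, 9, 11)


def snap_to_scale(pitch: float, root: int = 60,
                  scale: tuple = LYDIAN_AUGMENTED) -> int:
    """Return the scale note (as a MIDI number) closest to `pitch`.

    `root` is an assumed tonic (60 = middle C). When two scale notes are
    equally close, the lower one is chosen.
    """
    octave = int((pitch - root) // 12)
    # Collect the scale notes in the octave below, at, and above the pitch.
    candidates = [
        root + 12 * (octave + shift) + step
        for shift in (-1, 0, 1)
        for step in scale
    ]
    return min(candidates, key=lambda c: (abs(c - pitch), c))
```

For example, with a root of C the pitch 60.4 snaps to C (60), and G (67), which is not in the scale, snaps down to F♯ (66).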

“There is an element of subjectivity in this,” Agrawal says, “because, of course, the music isn’t what space actually sounds like, even before I began adding musical tracks. It’s my interaction with the universe, my interpretation of it through sound. I would find it interesting to hear how other people sonify the same data, how they interact with the same universe.”

Agrawal’s composition has already been published on the ZTF website, along with a short video of supernova discoveries that uses portions of Agrawal’s composition for background music.

But Agrawal’s imagination reaches well beyond her first composition: “Obviously the parameters will be different for every dataset, but this type of sonification can be done with any dataset. And with the right algorithms, sonifications can be created automatically and in real time. These compositions could be published on streaming services or played within planetariums, helping astrophysics discoveries to reach wider audiences.”

Until those algorithms come along, Fremling, Agrawal, and the outreach coordinator of ZTF have created the resources and tutorials needed to enable anyone to sonify ZTF datasets. The aim is to build a library of sonifications that can be offered to educators, artists, science engagement centers, astronomy visualization professionals, and more to improve and enrich accessibility to science. All resources are available.

Of course, new data will come along to shift our perspective on supernovae, and as a consequence, musical compositions featuring them will change too. “Just within the last year or two we have found a new type of supernova, even though people have been studying supernovae since the 1940s and 1950s,” Fremling says. Agrawal will need to introduce another instrument into her orchestra.

Also, supernova data can be interpreted in different ways. For example, Fremling notes, “some types of supernovae are inherently always very similar in absolute luminosity. The only reason their luminosity varies in the dataset—which Agrawal has translated into volume in her composition—is because these supernovae are occurring at different distances from our observatory at Palomar.”
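Fremling's point follows from the inverse-square law: for a fixed intrinsic luminosity, the brightness measured at the telescope falls off with the square of the distance. The short sketch below illustrates the idea. The function name and units are chosen for this example, and at the large distances of real supernovae astronomers use the redshift-dependent "luminosity distance" in place of a simple distance.

```python
import math


def observed_flux(luminosity_watts: float, distance_m: float) -> float:
    """Brightness seen by an observer (W/m^2) for a source that radiates
    `luminosity_watts` equally in all directions from `distance_m` away."""
    return luminosity_watts / (4 * math.pi * distance_m ** 2)


# Two identical explosions, one twice as far away as the other:
near = observed_flux(1e36, 1e24)
far = observed_flux(1e36, 2e24)
ratio = near / far  # the nearer one appears about four times brighter
```

In a sonification that maps observed brightness to volume, two intrinsically identical supernovae would therefore play at different volumes simply because one is farther away.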

Agrawal is bound for Washington University in St. Louis in fall 2024, planning to double major in music and astrophysics.

Written by Cynthia Eller.