Researchers at Simon Fraser University (SFU) in Canada have developed a way to turn ordinary 2D photos, such as those taken with a smartphone, into editable, realistic 3D models.

This leap forward in technology means we’re moving beyond flat images to capturing moments in three dimensions, offering endless possibilities for creativity and interaction.

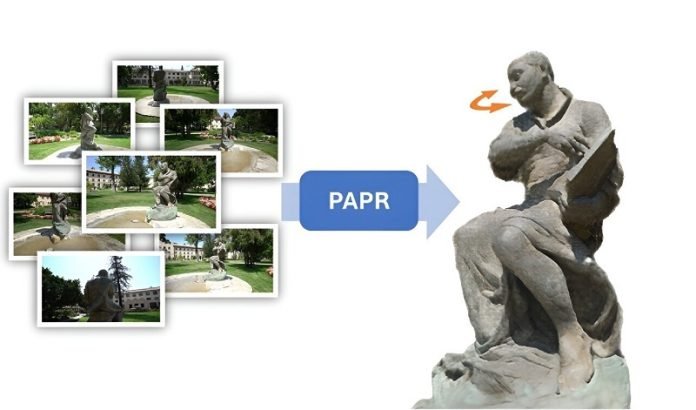

The researchers have introduced a new AI technology called Proximity Attention Point Rendering (PAPR).

This approach takes a series of pictures of an object from different angles and turns them into a 3D model that closely resembles the real thing.

You can then tweak and change this model just like you would edit a photo, but with the added dimension of depth.

Imagine walking around a statue, taking photos from various angles, and then, with a few taps on your phone, creating a 3D model of it. You can change its shape, adjust its appearance, and even make it move.

For example, the researchers demonstrated how they made a statue appear to turn its head, bringing it to life in a video as if it were actually moving.

Dr. Ke Li, a leading figure in this research, sees this as just the beginning of a new era where 3D creations become as common as photos and videos are today. The challenge up to now has been finding a way to easily edit 3D models.

Traditional methods were either too rigid or too complex, making it difficult for everyday users to play with 3D designs.

The breakthrough came when the team imagined treating each point in the 3D model not just as a part of the object but as a handle or control point.

Moving one of these points changes the model in a way that feels natural and intuitive, much like moving a puppet. This approach opens up a whole new world of editing 3D models with ease.

Creating a mathematical model that could do this wasn’t easy. The team developed a machine learning model that learns how to interpolate, or fill in the gaps, between points in 3D space. As a result, when you move a point, the surrounding parts of the model adjust smoothly and automatically.
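To make the idea of interpolating between points concrete, here is a minimal sketch in Python. It is not the researchers' model: PAPR learns its interpolation from data, whereas this example assumes a fixed rule in which each point's influence falls off with distance (a softmax over negative squared distances). The function names, the `temperature` parameter, and the sample points and values are all hypothetical, chosen only for illustration.

```python
import math

def proximity_weights(query, points, temperature=0.5):
    """Weight each point by closeness to the query location.

    Assumption: a fixed softmax over negative squared distances,
    standing in for the interpolation that PAPR learns from data.
    """
    scores = [
        -sum((q - p) ** 2 for q, p in zip(query, point)) / temperature
        for point in points
    ]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def interpolate(query, points, values, temperature=0.5):
    """Blend the per-point values into one value at the query location."""
    weights = proximity_weights(query, points, temperature)
    return sum(w * v for w, v in zip(weights, values))

# Three hypothetical points in 3D, each carrying one scalar value
# (for example, a brightness).
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
values = [0.0, 1.0, 2.0]
query = (0.5, 0.0, 0.0)

weights = proximity_weights(query, points)
before = interpolate(query, points, values)

# "Edit" the model by dragging the second point far from the query.
edited_points = list(points)
edited_points[1] = (3.0, 0.0, 0.0)
after = interpolate(query, edited_points, values)

print(f"before edit: {before:.3f}, after edit: {after:.3f}")
```

Dragging the second point away reduces its influence at the query location, so the blended value shifts toward the remaining nearby points without any other point being touched. That is the sense in which a single point can act as a handle for the region around it.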

The work was recognized at the 2023 Conference on Neural Information Processing Systems (NeurIPS) in New Orleans, Louisiana, where the team's paper was selected as a spotlight, an honor given to the top 3.6% of submissions.

Looking ahead, Dr. Li and his team are excited about the potential applications of PAPR. Beyond just animating statues, they are exploring ways to capture and model entire moving scenes in 3D.

The implications of this technology are vast, from enhancing virtual reality experiences to creating more immersive storytelling mediums.

This innovation represents a significant step forward in making 3D modeling not just a tool for professionals but something anyone can enjoy and use, bringing a new dimension to how we capture and share our world.