Professor Jiyun Kim and his team from the Department of Materials Science and Engineering at UNIST have developed a technology that recognizes human emotions in real time.

The technology could be a significant step for wearable devices, allowing them to recognize how users feel and respond accordingly.

Automatically recognizing emotions has long been difficult, because feelings, moods, and emotional states are complex and hard to pin down.

The team tackled this challenge with a multimodal system that combines verbal data (the voice) with non-verbal data (facial expressions), capturing a fuller picture of a person's emotional state.

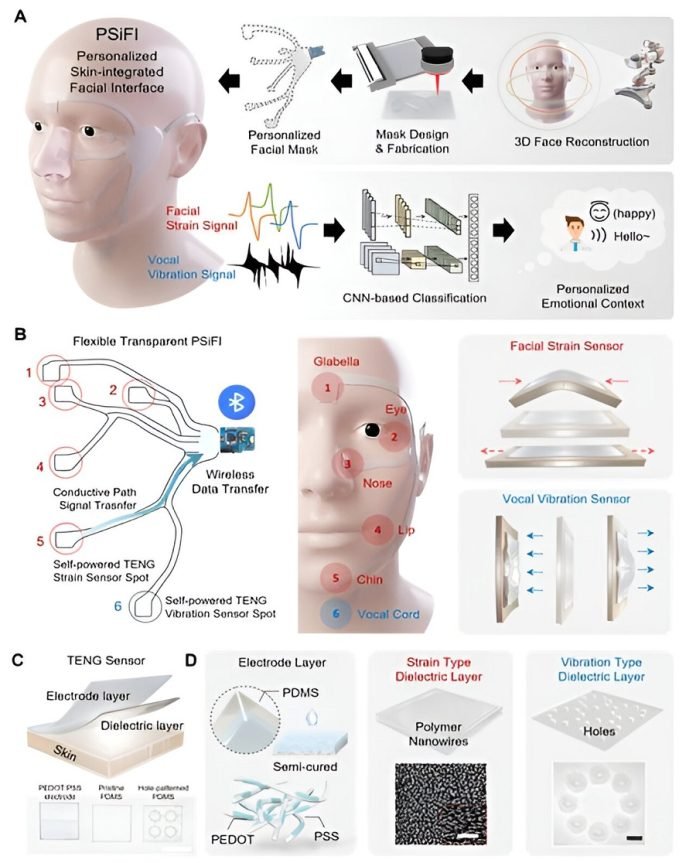

At the core of the system is a wearable device called the personalized skin-integrated facial interface (PSiFI).

The device is self-powered, flexible, and transparent, and it can detect subtle facial movements and vocal vibrations simultaneously.

It requires no external power supply or complex measurement equipment, because it generates its own electrical signals from the movements and sounds it captures.

The device can also recognize emotions in real time, even when the wearer has a mask on.

It uses machine learning to classify the wearer's emotion from the collected data with high accuracy.

The team has also demonstrated the system in a virtual reality environment, where it acts as a digital concierge that suggests services based on the user's emotional state.

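The PSiFI system works through friction charging (triboelectrification): when two materials touch and then separate, electric charge transfers between them, so facial movements and voice vibrations generate electrical signals on their own.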
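For intuition, the textbook model of an idealized contact-separation triboelectric generator relates the open-circuit voltage to the surface charge density σ left by contact and the separation distance x(t) between the charged layers. This is a general physics sketch under an assumed configuration; the article does not say which triboelectric mode PSiFI uses.

$$V_{\mathrm{OC}}(t) = \frac{\sigma \, x(t)}{\varepsilon_0}$$

Here ε₀ is the vacuum permittivity. Because the voltage tracks x(t), any facial muscle movement or vocal vibration that changes the gap between the layers produces a time-varying electrical signal without an external power supply.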

Each device is custom-made: the team used a dedicated fabrication technique to produce a transparent conductor for the sensor electrodes, along with a personalized mask shaped to the wearer's face, so the interface is flexible, transparent, and stretchable.

Rather than recognizing smiles or frowns alone, the system combines the detection of facial muscle movements and vocal vibrations to identify emotions accurately in real time.
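The article does not describe the team's machine-learning model. As a purely illustrative sketch, assuming each sensor channel has already been reduced to a few numeric features, the snippet below shows one simple way to combine the two modalities: concatenate the facial and vocal feature vectors ("early fusion") and classify them with a nearest-centroid rule, which needs only a handful of labeled examples per emotion. The feature values, labels, and the functions `fuse`, `train_centroids`, and `classify` are hypothetical and are not the PSiFI team's method.

```python
import math
from collections import defaultdict

# Hypothetical example only: feature values and labels are invented,
# and this is not the classifier used in the PSiFI study.


def fuse(face_features, voice_features):
    """Early fusion: concatenate facial-strain and voice-vibration features."""
    return list(face_features) + list(voice_features)


def train_centroids(samples):
    """Nearest-centroid training: average the fused vectors for each label."""
    sums = {}
    counts = defaultdict(int)
    for vector, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(vector)
        for i, value in enumerate(vector):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def classify(centroids, vector):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vector))


# A tiny, made-up training set: two labeled samples per emotion.
training = [
    (fuse([0.9, 0.1], [0.6, 0.2]), "happy"),
    (fuse([0.8, 0.2], [0.7, 0.1]), "happy"),
    (fuse([0.1, 0.8], [0.2, 0.9]), "sad"),
    (fuse([0.2, 0.9], [0.1, 0.8]), "sad"),
]
centroids = train_centroids(training)

# Classify a new fused reading.
print(classify(centroids, fuse([0.85, 0.15], [0.65, 0.15])))
```

A nearest-centroid rule is used here only because it is short and works with very little labeled data; a real system would likely rely on richer signal features and a more capable model.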

In the virtual reality demonstration, the device offered personalized recommendations in smart home, private theater, and smart office settings, all based on the user's emotions.

Jin Pyo Lee, who played a key role in the study, explained that their system makes real-time emotion recognition possible with minimal setup and training. This opens the door to portable emotion-sensing devices and a new era of digital services that respond to how we feel.

In their experiments, the team collected facial and vocal expression data and achieved high emotion-recognition accuracy after only minimal training. Because the device is wireless and customizable, it is well suited to everyday wear.

By applying this system in virtual reality, they’ve taken the first steps toward creating environments that can adapt to our emotional state, offering song, movie, or book recommendations that suit our mood.

Professor Kim believes that for gadgets and machines to interact effectively with humans, they need to understand the complex information humans convey, including emotions.

The research, published in Nature Communications, highlights the potential of emotional data for next-generation wearable devices and services.