Advancements in robotics and artificial intelligence have paved the way for more efficient teleoperation, where robots are remotely controlled to perform tasks in distant locations.

However, existing teleoperation systems tend to be limited to specific settings and robots, restricting their applicability in diverse real-world environments.

To address this, researchers from NVIDIA and UC San Diego have developed AnyTeleop, a computer vision-based teleoperation system that can be used in a variety of scenarios, with different robotic arms and hands.

Moving Beyond Specific Robots and Environments

AnyTeleop was designed to be low-cost, easy to deploy, and generalizable across different tasks, environments, and robotic systems.

Traditional teleoperation systems often require significant investment in sensing hardware and are restricted to particular robots and environments.

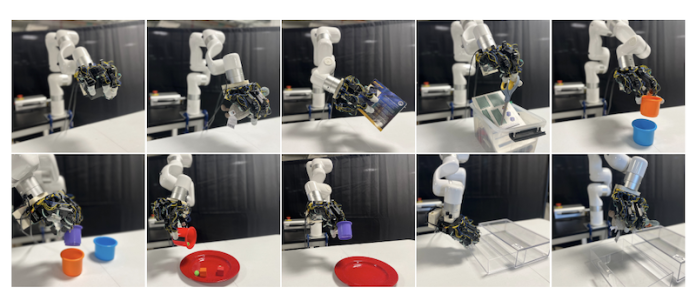

In contrast, AnyTeleop tracks human hand movements with one or more cameras and transfers that motion to multi-fingered robot hands, while a CUDA-powered motion planner controls the robot arm.
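The idea of transferring tracked hand motion to a robot hand can be illustrated with a small sketch. It is not AnyTeleop's implementation: the planar two-joint finger, the link lengths, the joint limits, and the function names `fingertip` and `retarget` are all assumptions made for illustration. The sketch only shows the general principle that a tracked human fingertip position is scaled to the robot's size and the robot's joint angles are then adjusted until its fingertip matches.

```python
import math

def fingertip(q, links):
    """Fingertip (x, y) of a hypothetical planar two-joint robot finger."""
    l1, l2 = links
    a, b = q[0], q[0] + q[1]
    return (l1 * math.cos(a) + l2 * math.cos(b),
            l1 * math.sin(a) + l2 * math.sin(b))

def retarget(human_tip, links, scale=1.0, q_init=(0.3, 0.3),
             steps=2000, lr=0.1, limits=(0.0, math.pi / 2)):
    """Find joint angles whose fingertip matches the scaled human fingertip.

    Uses projected gradient descent on the squared position error.
    All defaults are illustrative assumptions, not values from the paper.
    """
    l1, l2 = links
    tx, ty = scale * human_tip[0], scale * human_tip[1]
    lo, hi = limits
    q = list(q_init)
    for _ in range(steps):
        x, y = fingertip(q, links)
        ex, ey = x - tx, y - ty                 # position error
        a, b = q[0], q[0] + q[1]
        # Partial derivatives of the fingertip position w.r.t. each joint
        dx0 = -l1 * math.sin(a) - l2 * math.sin(b)
        dy0 = l1 * math.cos(a) + l2 * math.cos(b)
        dx1 = -l2 * math.sin(b)
        dy1 = l2 * math.cos(b)
        g0 = ex * dx0 + ey * dy0                # gradient of 0.5 * error^2
        g1 = ex * dx1 + ey * dy1
        # Gradient step, then clamp to the joint limits
        q[0] = min(hi, max(lo, q[0] - lr * g0))
        q[1] = min(hi, max(lo, q[1] - lr * g1))
    return tuple(q)

links = (1.0, 0.8)                              # arbitrary units
human_tip = fingertip((0.6, 0.9), links)        # stand-in for a camera-tracked fingertip
q_robot = retarget(human_tip, links)
```

In a real system this kind of optimization would run once per camera frame for every finger, with the previous solution used as the starting point so that the robot hand moves smoothly.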

The system was trained using both virtual robots in simulated environments and real robots in a physical setting.

Benefits and Applications of AnyTeleop

AnyTeleop stands out due to its ability to interface with a variety of robot arms, hands, camera configurations, and different simulated or real-world environments.

It can be used to operate robots both nearby and at distant locations. The platform is also designed to collect human demonstration data, that is, recordings of how people carry out specific tasks.

This data can then be used to better train robots to complete these tasks autonomously.
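As a rough illustration of what such demonstration data might look like, the sketch below stores one teleoperated episode as a sequence of timestamped observation and action pairs. The class names, field names, and the task label are hypothetical and are not taken from AnyTeleop; the observation and action layouts are likewise assumptions.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List

@dataclass
class Step:
    t: float                  # seconds since the start of the episode
    observation: List[float]  # e.g. measured robot joint positions (assumed layout)
    action: List[float]       # e.g. joint targets commanded by the operator

@dataclass
class Demonstration:
    task: str
    steps: List[Step] = field(default_factory=list)

    def record(self, t, observation, action):
        """Append one timestep of the operator's demonstration."""
        self.steps.append(Step(t, list(observation), list(action)))

    def to_pairs(self):
        """Return (observation, action) pairs, a common input for imitation learning."""
        return [(s.observation, s.action) for s in self.steps]

    def to_json(self):
        """Serialize the whole episode so it can be stored or shared."""
        return json.dumps(asdict(self))

demo = Demonstration(task="pick_cube")           # hypothetical task label
demo.record(0.0, [0.0, 0.0], [0.1, 0.0])
demo.record(0.1, [0.1, 0.0], [0.2, 0.1])
```

Keeping observations and actions aligned per timestep is what lets a learning algorithm later treat the operator's commands as labels for the robot's state.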

Dieter Fox, senior director of robotics research at NVIDIA, said, “One potential application is to deploy virtual environments and virtual robots in the cloud, allowing users with entry-level computers and cameras to teleoperate them.

“This could revolutionize the data pipeline for teaching robots new skills.”

Promising Initial Results and Future Plans

In initial tests, AnyTeleop outperformed an existing teleoperation system that was designed for one specific robot, which suggests that its general-purpose design does not come at the cost of performance.

NVIDIA plans to release an open-source version of AnyTeleop, which will allow research teams worldwide to apply it to their robots.

The team will next focus on using the collected data to explore further robot learning and on overcoming domain gaps when transferring robot models from simulated environments to the real world.

The study was published on arXiv.

Copyright © 2023 Knowridge Science Report. All rights reserved.