A team of innovative engineers from the University of California San Diego (UCSD) has made a groundbreaking stride in robotics.

They’ve built a robotic hand that can handle and rotate a variety of objects using only its sense of touch, just like us humans.

This is a big deal because the hand can handle and rotate objects without depending on vision at all.

This kind of technology could be extremely useful for robots working in the dark or in situations where seeing isn’t possible.

The UCSD engineers showed off their fascinating work at the 2023 Robotics: Science and Systems Conference. You can also find their detailed research paper online.

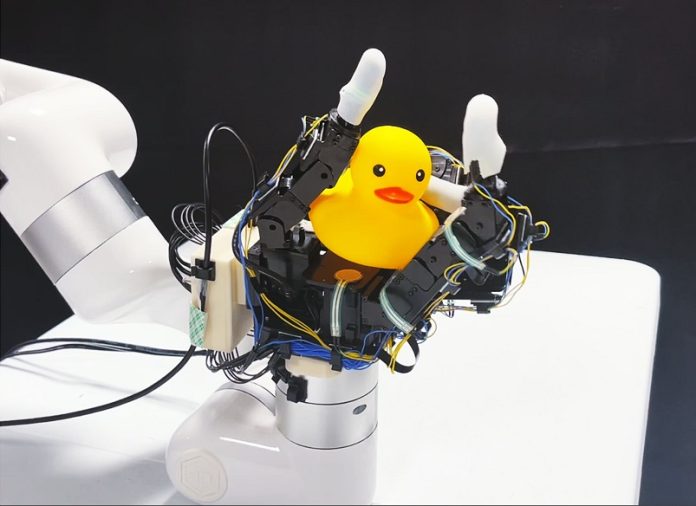

So, how did they pull it off? They started by fitting a four-fingered robot hand with a total of 16 touch sensors.

These sensors were spread out across the hand’s palm and fingers. Each sensor only cost around $12 and had one simple job: to figure out if it was touching something or not.

The beauty of this method is that it relies on a large number of inexpensive, basic touch sensors to rotate objects in the hand.

This is a change from previous approaches, which relied on a few expensive, detailed touch sensors attached to the fingertips of the robot hand.

Xiaolong Wang, an electrical and computer engineering professor at UCSD, was the mastermind behind this study. He explained that previous attempts had several issues.

First, having fewer sensors made it less likely for the robot hand to touch the object, limiting the sensing capability. Second, high-detail touch sensors that could sense texture were very hard to reproduce and very expensive, which made it difficult to use them in real-life experiments.

Lastly, a lot of the older methods still depended on vision.

Wang’s team chose a simpler route. They found that they didn’t need to know every little detail about an object’s texture to handle and rotate it.

All they needed were simple touch or no-touch signals, which are much easier to simulate and use in the real world.
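To make the touch or no-touch idea concrete, here is a minimal sketch of how raw sensor readings could be collapsed into binary signals. The function name, the normalized reading scale, and the `threshold` value are all illustrative assumptions; the article does not describe how the team's sensors are actually read.

```python
from typing import List, Sequence

NUM_SENSORS = 16  # from the article: 16 sensors across the palm and fingers


def to_contact_signals(raw_readings: Sequence[float],
                       threshold: float = 0.5) -> List[int]:
    """Collapse raw readings into touch (1) / no-touch (0) signals.

    The 0..1 reading scale and the 0.5 threshold are assumptions made
    for this sketch, not values reported by the researchers.
    """
    if len(raw_readings) != NUM_SENSORS:
        raise ValueError(f"expected {NUM_SENSORS} readings, got {len(raw_readings)}")
    return [1 if reading >= threshold else 0 for reading in raw_readings]
```

A signal this simple carries no texture information, which is part of why it is easy to reproduce in a simulator: the simulator only has to decide whether a sensor is in contact.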

This method gives the robot hand enough information about an object’s 3D shape and orientation to rotate it successfully without needing vision.

They trained the system using a virtual robot hand and a variety of objects with different shapes.

The system kept track of which sensors were touching the object during the rotation, the positions of the hand’s joints, and the hand’s previous actions. Using all this data, the system told the robot hand which movement to make next.
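The loop described above could be sketched roughly as follows. This is only an illustration of the data flow: the joint count, the history length, and the `PlaceholderPolicy` class (a random linear map standing in for whatever the team actually trained) are assumptions, not details from the study.

```python
import random
from collections import deque

NUM_SENSORS = 16  # from the article
NUM_JOINTS = 16   # assumption: the article does not give the joint count
HISTORY = 3       # assumption: number of past steps the system remembers
OBS_SIZE = NUM_SENSORS + 2 * NUM_JOINTS  # contacts + joint positions + last action


def build_observation(contacts, joint_positions, last_action):
    """Pack one time step: touch signals, joint positions, previous action."""
    return [float(c) for c in contacts] + list(joint_positions) + list(last_action)


def make_history():
    """Fixed-length history, pre-filled with all-zero observations."""
    return deque([[0.0] * OBS_SIZE for _ in range(HISTORY)], maxlen=HISTORY)


class PlaceholderPolicy:
    """Hypothetical stand-in for the trained controller (random linear map)."""

    def __init__(self, input_size, seed=0):
        rng = random.Random(seed)
        self.weights = [[rng.uniform(-0.01, 0.01) for _ in range(input_size)]
                        for _ in range(NUM_JOINTS)]

    def __call__(self, stacked_obs):
        # One output value per joint: a weighted sum of the stacked inputs.
        return [sum(w * x for w, x in zip(row, stacked_obs))
                for row in self.weights]


def control_step(policy, history, contacts, joint_positions, last_action):
    """Record the newest observation and ask the policy for the next action."""
    history.append(build_observation(contacts, joint_positions, last_action))
    stacked = [value for obs in history for value in obs]
    return policy(stacked)
```

In use, each call to `control_step` would be fed the latest touch signals and joint positions plus the action returned by the previous call, so the hand’s recent past always informs its next move.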

The team then tested the hand with real objects the system hadn’t yet encountered. The robot hand successfully rotated a range of objects, including a tomato, a pepper, a can of peanut butter, and even a rubber duck toy (which was a bit tricky because of its shape).

This isn’t the end of the road for Wang and his team. They are now trying to expand their method to more complex tasks. They’re working on giving robot hands the ability to catch, throw, and even juggle!

Handling objects is something that comes naturally to us humans, but it’s very tricky for robots. If we can equip robots with this ability, imagine all the cool tasks they could do!

It’s a big leap forward in the field of robotics, and we can’t wait to see where this goes next.
