Have you ever wondered how your smartphone or other portable devices can do so many cool things, like recognize your voice or face?

The answer is artificial intelligence (AI) running on these devices. But there’s a limit to what your device can do, because it’s held back by the hardware inside.

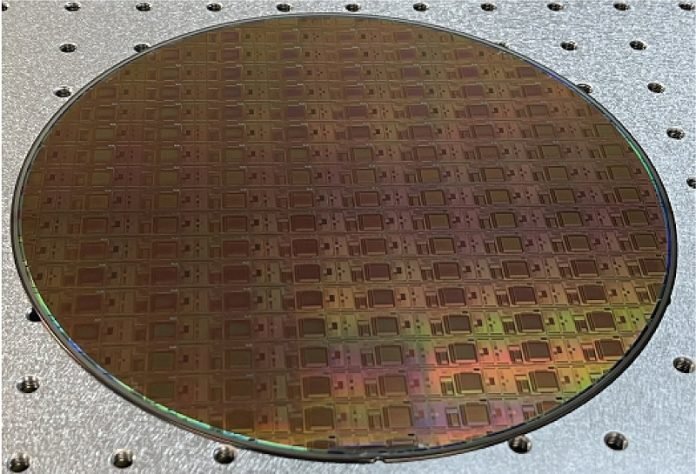

Now, a group of researchers led by Professor Joshua Yang of USC may change this with a new type of chip that offers record-setting memory density for portable AI devices.

Today, AI and data science rely on ever larger and more complex neural networks, but the hardware inside our devices can’t keep up.

Many researchers are trying to solve this problem.

Some are improving existing silicon chips, while others are experimenting with new materials and devices. Yang’s work sits in between: it combines new materials with traditional silicon technology to make chips that can handle heavy AI computations.

In a recent paper, Yang and a team of researchers from USC, MIT, and the University of Massachusetts showed how to reduce “noise” in memory devices. Less noise lets each device reliably hold more distinct states, which increases memory capacity.

The result is a memory chip with the highest information density of any known memory technology: 11 bits per memory cell. Small but powerful chips like these could make portable devices far more capable while running on only a small battery.
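To put 11 bits in perspective: a cell that stores n bits must hold 2^n distinguishable states, so 11 bits means 2,048 levels, compared with 2 for a conventional one-bit cell. The sketch below also illustrates, under a deliberately simplified assumption, why lowering noise raises capacity. It assumes levels are evenly spaced across a fixed conductance window and can be told apart only when their spacing exceeds a chosen multiple `k` of the noise. The function names, the window, the noise values, and `k` are hypothetical illustration values, not figures from the paper.

```python
import math


def bits_per_cell(levels: int) -> int:
    """Whole bits a cell can store if it holds `levels` distinguishable states."""
    return int(math.floor(math.log2(levels)))


def distinguishable_levels(window: float, noise_sigma: float, k: float = 6.0) -> int:
    """Hypothetical toy model: evenly spaced levels across a conductance window,
    each needing a spacing of at least k * noise_sigma to be told apart."""
    spacing = k * noise_sigma
    # Levels sit at 0, spacing, 2*spacing, ... up to the window edge.
    return int(window // spacing) + 1


print(2 ** 11)              # states needed for 11 bits per cell: 2048
print(bits_per_cell(2048))  # 11

# Halving the (hypothetical) noise roughly doubles the usable levels.
for sigma in (2.0, 1.0):
    levels = distinguishable_levels(100.0, sigma)
    print(sigma, levels, bits_per_cell(levels))
```

In this toy model, cutting the noise from 2.0 to 1.0 raises the usable levels from 9 to 17, adding one bit per cell; each further halving of the noise buys roughly one more bit.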

Yang and his colleagues are building chips that combine silicon with metal oxide memristors, devices that deliver high performance while using less energy. These chips change the way information is stored and processed: they rely on the positions of atoms instead of the number of electrons.

This makes it possible to store more information in a smaller space and to process that information right where it is stored. Doing so removes a major bottleneck in current computing systems, which spend time and energy moving data between separate memory and processing units, and makes AI computing faster and more energy-efficient.
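One widely used way for memristor arrays to compute where data is stored is an analog crossbar. Each cell’s conductance G[i][j] encodes a weight, input voltages V[i] drive the rows, and by Ohm’s law and Kirchhoff’s current law each column collects a current I[j] = sum over i of G[i][j] * V[i]. That is a matrix-vector product, the core operation of neural networks, computed without moving the weights. The article does not say this is exactly how Yang’s chip computes; the sketch below is an idealized, noise-free simulation, and the function name and values are hypothetical.

```python
from typing import List


def crossbar_currents(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Idealized crossbar (hypothetical helper): rows are driven by voltages,
    columns collect current. conductances[i][j] is the cell at row i, column j."""
    rows = len(conductances)
    if rows != len(voltages):
        raise ValueError("need one input voltage per row")
    cols = len(conductances[0])
    currents = [0.0] * cols
    for i in range(rows):
        for j in range(cols):
            # Ohm's law per cell (I = G * V); Kirchhoff's current law sums each column.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Weights stored as conductances (arbitrary units), inputs applied as voltages.
weights = [[1.0, 2.0],
           [3.0, 4.0]]
print(crossbar_currents(weights, [1.0, 0.5]))  # [2.5, 4.0]
```

The stored weights never leave the array: only the input voltages go in and the summed column currents come out, which is what avoids shuttling data between memory and processor.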

Traditional chips store information using electrons, which are “light” and can move around, making the stored data less stable. Yang and his team instead store information in whole atoms.

This new method needs no battery power to maintain stored information, which saves both time and energy. This kind of stable memory is especially important for AI computations.

Yang believes this new technology could make AI even more powerful on portable devices such as Google Glass.

With this new method, chips can shrink while packing more computing capacity into a smaller area. This could lead to “many more levels of memory to help increase information density.”

Imagine having a mini version of powerful AI like ChatGPT on your device! This could make high-powered tech more affordable and accessible for everyone, opening up possibilities for all sorts of applications.