The notion of a large metallic robot that speaks in monotone and moves in lumbering, deliberate steps is somewhat hard to shake.

But practitioners in the field of soft robotics have an entirely different image in mind — autonomous devices composed of compliant parts that are gentle to the touch, more closely resembling human fingers than R2-D2 or Robby the Robot.

That model is now being pursued by Professor Edward Adelson and his Perceptual Science Group at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL).

In a recent project, Adelson and Sandra Liu, a mechanical engineering PhD student at CSAIL, have developed a robotic gripper that uses novel “GelSight Fin Ray” fingers and that, like the human hand, is supple enough to manipulate objects.

What sets this work apart from other efforts in the field is that Liu and Adelson have endowed their gripper with touch sensors that can meet or exceed the sensitivity of human skin.

Their work was presented last week at the 2022 IEEE 5th International Conference on Soft Robotics.

The fin ray has become a popular item in soft robotics owing to a discovery made in 1997 by the German biologist Leif Kniese.

He noticed that when he pushed against a fish’s tail with his finger, the ray would bend toward the applied force, almost embracing his finger, rather than tilting away.

Despite its popularity, the design lacks tactile sensitivity. “It’s versatile because it can passively adapt to different shapes and therefore grasp a variety of objects,” Liu explains.

“But in order to go beyond what others in the field had already done, we set out to incorporate a rich tactile sensor into our gripper.”

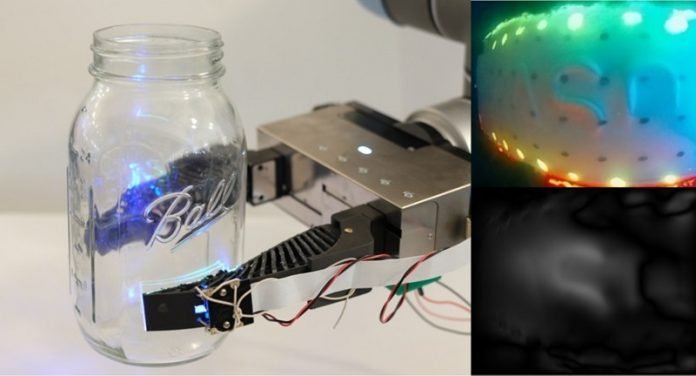

The gripper consists of two flexible fin ray fingers that conform to the shape of the object they come in contact with.

The fingers themselves are 3D-printed from flexible plastic, which is standard practice in the field. However, the fingers typically used in soft robotic grippers have supportive cross-struts running the length of their interiors, whereas Liu and Adelson hollowed out the interior to make room for their camera and other sensory components.

The camera is mounted to a semirigid backing on one end of the hollowed-out cavity, which is, itself, illuminated by LEDs. The camera faces a layer of “sensory” pads composed of silicone gel (known as “GelSight”) that is glued to a thin layer of acrylic material.

The acrylic sheet, in turn, is attached to the plastic finger piece at the opposite end of the inner cavity. Upon touching an object, the finger will seamlessly fold around it, melding to the object’s contours. By determining exactly how the silicone and acrylic sheets are deformed during this interaction, the camera — along with accompanying computational algorithms — can assess the general shape of the object, its surface roughness, its orientation in space, and the force being applied by (and imparted to) each finger.

Liu and Adelson tested their gripper in an experiment in which just one of the two fingers was “sensorized.” Their device successfully handled such items as a mini-screwdriver, a plastic strawberry, an acrylic paint tube, a Ball Mason jar, and a wine glass. While the gripper was holding the fake strawberry, for instance, the internal sensor was able to detect the “seeds” on its surface. The fingers grabbed the paint tube without squeezing so hard as to breach the container and spill its contents.

The GelSight sensor could even make out the lettering on the Mason jar, and did so in a rather clever way. The overall shape of the jar was ascertained first by seeing how the acrylic sheet was bent when wrapped around it. That pattern was then subtracted, by a computer algorithm, from the deformation of the silicone pad, and what was left was the more subtle deformation due just to the letters.
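The subtraction step can be illustrated in a few lines. This is a minimal sketch, not the authors' algorithm: it assumes the coarse shape (inferred from how the acrylic sheet bends) and the total gel-pad deformation have already been estimated as depth maps on the same grid and in the same units. The function names, the synthetic "jar" data, and the threshold value are all hypothetical.

```python
from typing import List, Tuple

Grid = List[List[float]]


def subtract_coarse_shape(pad: Grid, sheet: Grid) -> Grid:
    """Remove the object's overall shape (sheet bending) from the gel-pad deformation."""
    if len(pad) != len(sheet) or any(len(a) != len(b) for a, b in zip(pad, sheet)):
        raise ValueError("pad and sheet maps must share the same grid")
    # Whatever remains after subtraction is the fine surface detail.
    return [[p - s for p, s in zip(prow, srow)] for prow, srow in zip(pad, sheet)]


def fine_features(residual: Grid, threshold: float) -> List[Tuple[int, int]]:
    """Return (row, col) cells whose residual deformation exceeds the threshold."""
    return [
        (r, c)
        for r, row in enumerate(residual)
        for c, value in enumerate(row)
        if value > threshold
    ]


# Hypothetical example: a jar's curvature bends the sheet smoothly across columns,
# and raised lettering adds a small extra indentation at two cells.
sheet = [[0.01 * (c - 4) ** 2 for c in range(9)] for _ in range(3)]
letters = {(1, 2), (1, 3)}
pad = [[sheet[r][c] + (0.2 if (r, c) in letters else 0.0) for c in range(9)] for r in range(3)]

residual = subtract_coarse_shape(pad, sheet)
print(fine_features(residual, threshold=0.1))  # prints [(1, 2), (1, 3)]
```

Separating the two scales this way means the large, smooth deformation from the jar's curvature does not swamp the much smaller signal from the lettering.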

Glass objects are challenging for vision-based robots because glass refracts light. Tactile sensors are immune to such optical ambiguity. When the gripper picked up the wine glass, it could feel the orientation of the stem and could make sure the glass was pointing straight up before it was slowly lowered. When the base touched the tabletop, the gel pad sensed the contact. Proper placement occurred in seven out of 10 trials and, thankfully, no glass was harmed during the filming of this experiment.
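The article does not say how the stem's orientation is computed. One standard technique for estimating the orientation of an elongated imprint, such as a stem pressed into the gel, is to take the principal axis of the contact region from its second-order image moments. The sketch below assumes the sensor image has already been thresholded into a binary contact mask and that the sensor's row axis corresponds to "vertical"; `principal_axis_deg` and `tilt_from_vertical_deg` are hypothetical names, and this is not presented as the authors' method.

```python
import math
from typing import List, Tuple


def principal_axis_deg(mask: List[List[int]]) -> float:
    """Angle of the contact patch's long axis, in degrees from the column (x) axis.

    Image coordinates are assumed: x = column index, y = row index (increasing downward).
    """
    pts: List[Tuple[int, int]] = [
        (c, r) for r, row in enumerate(mask) for c, v in enumerate(row) if v
    ]
    if len(pts) < 2:
        raise ValueError("need at least two contact pixels")
    n = len(pts)
    xbar = sum(x for x, _ in pts) / n
    ybar = sum(y for _, y in pts) / n
    # Second-order central moments of the contact region.
    mu20 = sum((x - xbar) ** 2 for x, _ in pts)
    mu02 = sum((y - ybar) ** 2 for _, y in pts)
    mu11 = sum((x - xbar) * (y - ybar) for x, y in pts)
    return math.degrees(0.5 * math.atan2(2 * mu11, mu20 - mu02))


def tilt_from_vertical_deg(mask: List[List[int]]) -> float:
    """Signed deviation of the long axis from the row (vertical) axis, in [-90, 90)."""
    # An axis has no direction, so angles are compared modulo 180 degrees.
    return (principal_axis_deg(mask) % 180) - 90


stem_upright = [[0, 1, 0] for _ in range(5)]
stem_tilted = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(f"{abs(tilt_from_vertical_deg(stem_upright)):.1f}")
print(f"{abs(tilt_from_vertical_deg(stem_tilted)):.1f}")
```

Because moments describe an axis rather than a direction, this estimate cannot by itself tell "up" from "down"; a controller would use the tilt value only to rotate the glass until the deviation is near zero.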

“Sensing with soft robots has been a big challenge, because it is difficult to set up sensors, which are traditionally rigid, on soft bodies,” says Wenzhen Yuan, an assistant professor in the Robotics Institute at Carnegie Mellon University who was not involved with the research. “This paper provides a neat solution to that problem.

“The authors used a very smart design to make their vision-based sensor work for the compliant gripper, in this way generating very good results when robots grasp objects or interact with the external environment. The technology has lots of potential to be widely used for robotic grippers in real-world environments.”

Liu and Adelson can foresee many possible applications for the GelSight Fin Ray, but they are first contemplating some improvements.

By hollowing out the finger to clear space for their sensory system, they introduced a structural instability, a tendency to twist, that they believe can be counteracted through better design.

They want to make GelSight sensors that are compatible with soft robots devised by other research teams. They also plan to develop a three-fingered gripper that could be useful in such tasks as picking up pieces of fruit and evaluating their ripeness.

Tactile sensing, in their approach, is based on inexpensive components: a camera, some gel, and some LEDs. Liu hopes that with a technology like GelSight, “it may be possible to come up with sensors that are both practical and affordable.” That, at least, is one goal that she and others in the lab are striving toward.

The Toyota Research Institute and the U.S. Office of Naval Research provided funds to support this work.

Written by Rachel Gordon.