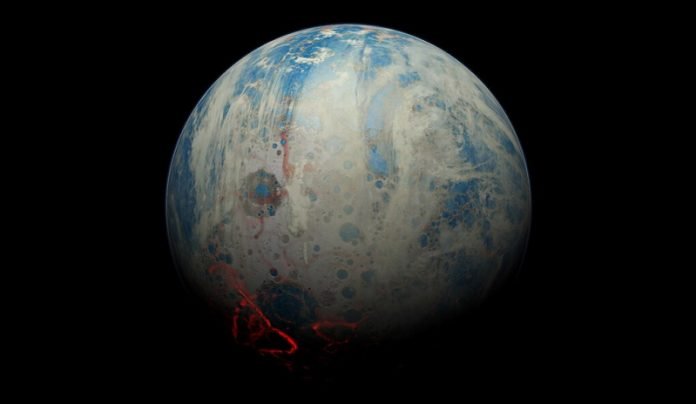

Researchers at Yale and Caltech have a bold new theory to explain how Earth transformed itself from a fiery, carbon-clouded ball of rocks into a planet capable of sustaining life.

The theory covers Earth’s earliest years and involves “weird” rocks that helped pull carbon out of the atmosphere and interacted with seawater in just the right way to help nudge biological molecules into existence.

“This period is the most enigmatic time in Earth history,” said Jun Korenaga, a professor of Earth and planetary sciences at Yale and co-author of a new study in the journal Nature.

“We’re presenting the most complete theory, by far, for Earth’s first 500 million years.”

The study’s first author is Yoshinori Miyazaki, a former Yale graduate student who is now a Stanback Postdoctoral Fellow at Caltech. The study is based on the final chapter of Miyazaki’s Yale dissertation.

Most scientists believe that Earth began with an atmosphere much like that of present-day Venus.

Its skies would have been filled with carbon dioxide, at more than 100,000 times the current level of atmospheric carbon, and Earth’s surface temperature would have exceeded 400 degrees Fahrenheit (about 200 degrees Celsius).

Life could not have formed, much less survived, under such conditions, scientists agree.

“Somehow, a massive amount of atmospheric carbon had to be removed,” Miyazaki said. “Because there is no rock record preserved from the early Earth, we set out to build a theoretical model for the very early Earth from scratch.”

Miyazaki and Korenaga combined aspects of thermodynamics, fluid mechanics, and atmospheric physics to build their model. Eventually, they settled on a bold proposition: early Earth was covered with rocks that no longer exist on Earth.

“These rocks would have been enriched in a mineral called pyroxene, and they likely had a dark greenish color,” Miyazaki said. “More importantly, they were extremely enriched in magnesium, with a concentration level seldom observed in present-day rocks.”

Miyazaki said magnesium-rich minerals react with carbon dioxide to produce carbonates, thereby playing a key role in sequestering atmospheric carbon.

The researchers suggest that as the molten Earth started to solidify, its hydrated mantle, the planet’s 3,000-kilometer-thick rocky layer, convected vigorously. The combination of a wet mantle and high-magnesium pyroxenites (rocks made largely of pyroxene) dramatically sped up the process of pulling CO2 out of the atmosphere.

In fact, the researchers said atmospheric carbon would have been sequestered more than 10 times faster than would be possible with a mantle of modern-day rocks, a process requiring a mere 160 million years.

“As an added bonus, these ‘weird’ rocks on the early Earth would readily react with seawater to generate a large flux of hydrogen, which is widely believed to be essential for the creation of biomolecules,” Korenaga said.

The effect would be similar to what happens at the Lost City hydrothermal field, a rare type of modern deep-sea hydrothermal vent system located in the Atlantic Ocean. The Lost City hydrothermal field’s abiotic production of hydrogen and methane has made it a prime location for investigating the origin of life on Earth.

“Our theory has the potential to address not just how Earth became habitable, but also why life emerged on it,” Korenaga added.

Grants from the National Aeronautics and Space Administration and the National Science Foundation helped to fund the research.

Written by Jim Shelton.